Langbase Docs

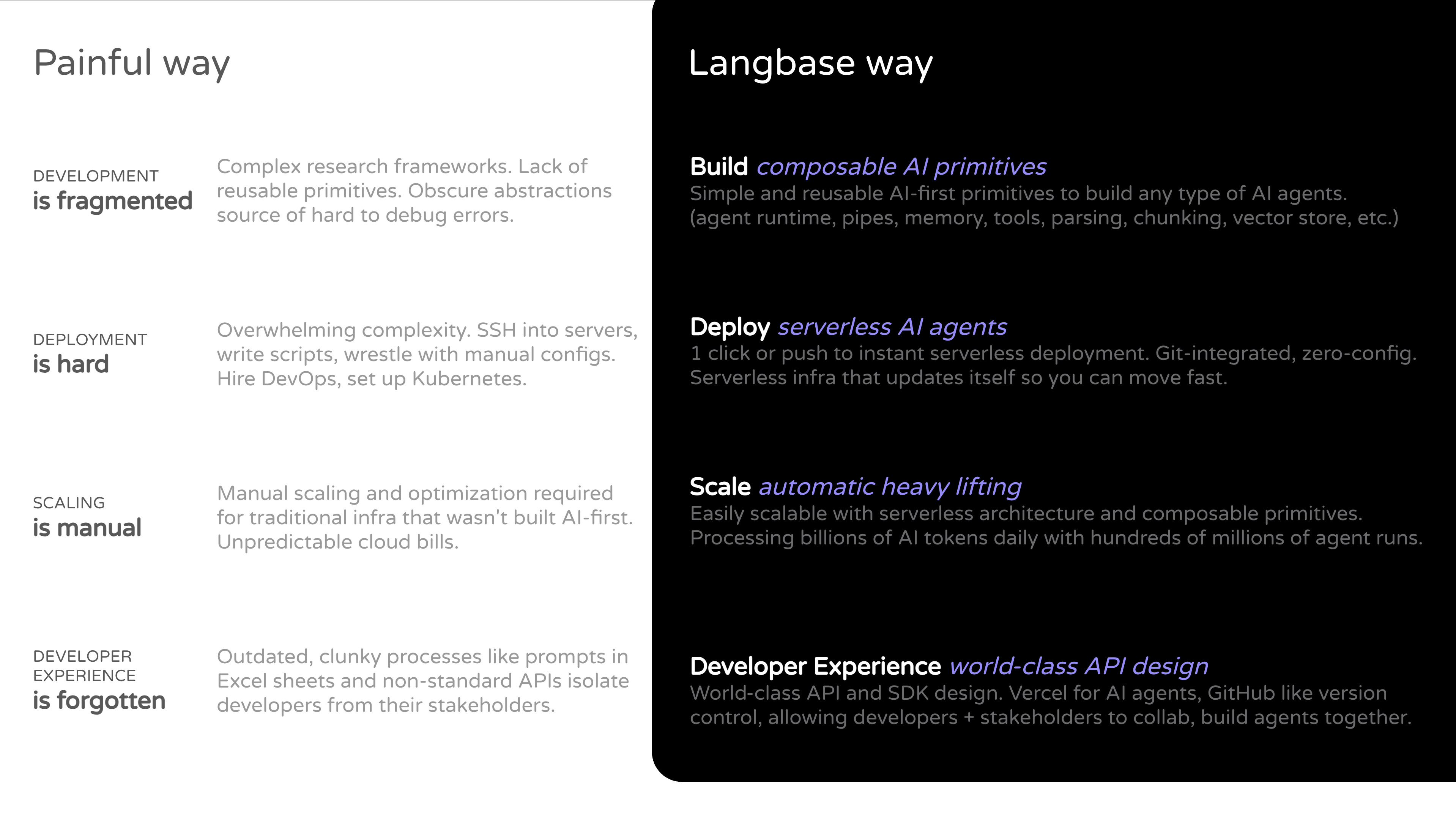

Langbase is the most powerful serverless AI cloud for building and deploying AI agents.

Build, deploy, and scale serverless AI agents with tools and memory (RAG). Simple AI primitives with a world-class developer experience, no bloated frameworks required.

| Products | Description |
|---|---|
| Command.new | Vibe code any AI agent. Command turns prompts into prod-ready agents |
| Pipe Agents | Pipes are a standard way to build serverless AI agents with tools and memory |
| AI Memory | Auto RAG AI primitive that gives agents human-like long-term memory |
| Workflow | Build multi-step agents with built-in durable features like timeouts and retries |
| Threads | Store and manage AI agents' context and conversation history without managing DBs |
| Agent | Agent is an AI primitive to build serverless agents with a unified API over 600+ LLMs |
| Parser | AI primitive to extract text content from various document formats, for RAG |
| Chunker | AI primitive to split text into smaller, manageable chunks for context, for RAG |
| Embed | AI primitive to convert text into vector embeddings with a unified API across multiple models, for RAG |
| Tools | Pre-built and hosted tools for AI agents like web crawler and web search with Exa |
| Langbase SDK | Easiest way to build AI agents with TypeScript (recommended) |
| HTTP API | Build AI agents with any language (Python, Go, PHP, etc.) |
| AI Studio | Langbase Studio is your playground to build, collaborate on, and deploy AI agents. Experiment with agent changes and memory retrieval in real time with real data; store messages, version your prompts, optimize cost, and view usage and traces. Access Langbase Studio. A complete AI developer platform. Collaborate: invite all team members to collaborate on the pipe and build AI together. Developers & Stakeholders: work with your whole R&D team across engineering, product, and GTM (marketing and sales). It's like a powerful version of GitHub x Google Docs for AI. |
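To make the Chunker row above more concrete, here is a minimal sketch of fixed-size chunking with overlap, one common way to split text for RAG. It does not call the Langbase API and is not necessarily how the Langbase Chunker works; `chunkText`, `maxLength`, and `overlap` are hypothetical names chosen for illustration.

```typescript
// Hypothetical helper for illustration only; not part of the Langbase SDK.
// Splits text into chunks of at most `maxLength` characters, where each chunk
// repeats the last `overlap` characters of the previous one to preserve context.
function chunkText(text: string, maxLength: number, overlap: number): string[] {
  if (maxLength <= 0) {
    throw new Error("maxLength must be positive");
  }
  if (overlap < 0 || overlap >= maxLength) {
    throw new Error("overlap must be in the range [0, maxLength)");
  }

  const chunks: string[] = [];
  const step = maxLength - overlap; // how far the window advances each time

  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + maxLength));
    // Stop once the current window has reached the end of the text.
    if (start + maxLength >= text.length) {
      break;
    }
  }
  return chunks;
}
```

For example, `chunkText("abcdefghij", 4, 1)` returns `["abcd", "defg", "ghij"]`: each chunk starts with the last character of the previous one.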

Langbase is the best way to build and deploy AI agents.

Our mission: AI for all. Not just ML wizards. Every. Single. Developer.

Build AI agents without any bloated frameworks. You write the logic, we handle the logistics.

Compared to complex AI frameworks, Langbase is serverless and the first composable AI platform.

- Start by building simple AI agents (pipes)

- Then train serverless semantic Memory agents (RAG) to get accurate and trusted results

Build Context-Engineered AI Agents

Learn to create context-engineered agents that use memory and AI primitives to take action and deliver accurate, production-ready results. Build your first Agentic RAG system using Langbase Pipes, Memory, and Tools — no frameworks needed.

Docs AI Agent: AI in docs

Build an AI agent with RAG that answers questions from your docs.

AI Email Agent: Handle your emails

Build a composable multi-agent architecture using the Langbase SDK.

Build your own v0, Lovable, or Bolt

Build a coding agent like v0, Lovable, or Bolt with the Langbase SDK.

Understand how RAG works

Understand how RAG works and how to use it in your app.
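As a rough illustration of the retrieval step in RAG, the sketch below scores text chunks against a query and puts the best matches into a prompt. It is a toy under stated assumptions: the word-count `embed` function stands in for a real embedding model, and `embed`, `cosine`, `retrieve`, and `buildPrompt` are hypothetical names, not Langbase APIs.

```typescript
// Toy retrieval sketch for illustration only; not the Langbase API.
// A real system would use a learned embedding model instead of word counts.
type Vector = Record<string, number>;

// Assumption: a bag-of-words count vector stands in for a real embedding.
function embed(text: string): Vector {
  const vec: Vector = Object.create(null);
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  for (const word of words) {
    vec[word] = (vec[word] ?? 0) + 1;
  }
  return vec;
}

// Cosine similarity between two sparse vectors (0 when either is empty).
function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (const word of Object.keys(a)) {
    dot += a[word] * (b[word] ?? 0);
    normA += a[word] * a[word];
  }
  for (const word of Object.keys(b)) {
    normB += b[word] * b[word];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Return the `topK` chunks most similar to the query.
function retrieve(query: string, chunks: string[], topK: number): string[] {
  const queryVec = embed(query);
  return chunks
    .map((chunk) => ({ chunk, score: cosine(queryVec, embed(chunk)) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map((entry) => entry.chunk);
}

// Place the retrieved chunks in the prompt so the model answers from them.
function buildPrompt(query: string, context: string[]): string {
  return `Answer using only this context:\n${context.join("\n")}\n\nQuestion: ${query}`;
}
```

The prompt returned by `buildPrompt` would then be sent to an LLM. Grounding the answer in retrieved chunks is what lets a RAG agent answer from your own documents rather than from the model's training data alone.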

Langbase Agent Examples

Explore open-source Langbase agent examples on GitHub.

Internet Research Agent Tool

Build a tool for AI agents that can help you research on the internet.