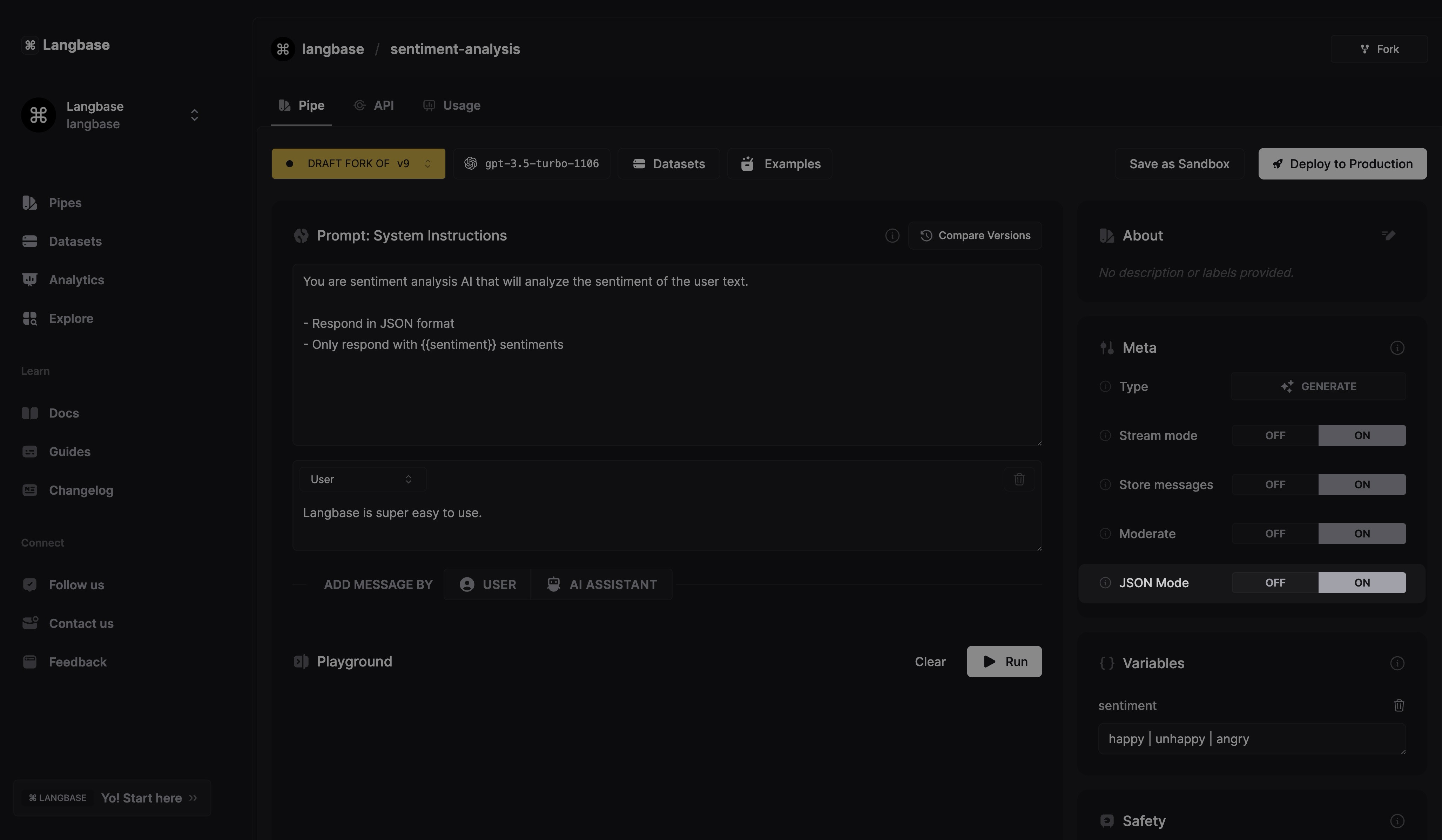

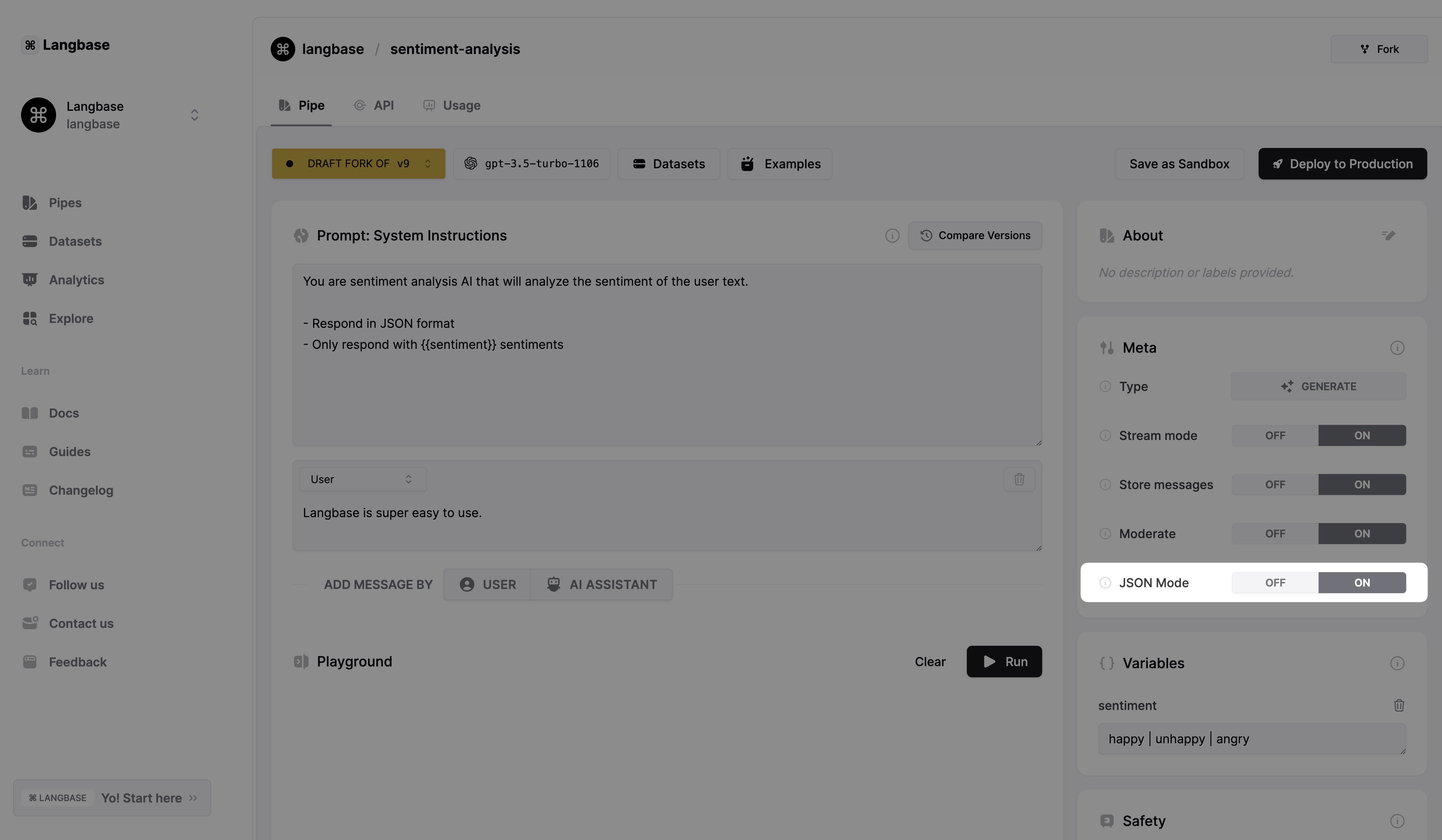

JSON Mode

JSON mode instructs the LLM to respond in JSON and to follow the schema you provide in the prompt. JSON mode is only available on models that support it.

Currently, the following models support JSON mode.

OpenAI

gpt-5.1-2025-11-13, gpt-5-2025-08-07, gpt-5-nano-2025-08-07, gpt-5-mini-2025-08-07, gpt-5-chat-latest, gpt-oss-120b, gpt-oss-20b, o3-mini, gpt-4.1, gpt-4.1-mini, gpt-4.1-nano, chatgpt-4o-latest, gpt-4.5-preview, gpt-4o, gpt-4o-mini, gpt-4o-2024-11-20, gpt-4o-2024-08-06, gpt-4-turbo, gpt-4-turbo-preview, gpt-4-0125-preview, gpt-3-0125-preview, gpt-3.5-turbo, gpt-3.5-turbo-0125, gpt-3.5-turbo-1106

Google

gemini-2.5-pro-preview-06-05, gemini-2.5-pro-preview-05-20, gemini-2.5-pro-preview-05-06, gemini-2.0-flash, gemini-2.0-flash-lite, gemini-1.5-pro, gemini-1.5-flash, gemini-1.5-flash-8b

Claude

claude-3.7-sonnet:thinking

Together

mistralai/magistral-medium-2506:thinking, Mistral-7B-Instruct-v0.1, Mixtral-8x7B-Instruct-v0.1

Deepseek

deepseek-chat-v3.1, deepseek-chat-v3.1:thinking, deepseek-chat-v3-0324:free, deepseek-chat-v3-0324, deepseek-chat

Azure OpenAI

azureopenai:o3-mini, azureopenai:gpt-4.5-preview, azureopenai:o1-mini, azureopenai:gpt-4o, azureopenai:gpt-4o-mini
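If you call models from your own code, you may want to mirror the list above so your client only enables JSON mode for a supported model and otherwise falls back to prompting for JSON. The following is a minimal sketch that assumes you maintain your own local copy of the model IDs (only a subset is shown). `JSON_MODE_MODELS`, `supports_json_mode`, and `choose_output_strategy` are hypothetical names, not part of any Langbase API.

```python
# Hypothetical local copy of a few model IDs from the JSON mode list.
# It is not fetched from any API; keep it in sync with the documentation.
JSON_MODE_MODELS = {
    "gpt-4o",
    "gpt-4o-mini",
    "gpt-4.1",
    "gemini-2.0-flash",
    "claude-3.7-sonnet:thinking",
    "deepseek-chat",
    "azureopenai:gpt-4o",
}


def supports_json_mode(model_id: str) -> bool:
    """Return True if the model ID appears in the local JSON mode list."""
    return model_id.strip() in JSON_MODE_MODELS


def choose_output_strategy(model_id: str) -> str:
    """Use JSON mode when available, else fall back to a schema in the prompt."""
    return "json_mode" if supports_json_mode(model_id) else "prompt_with_schema"


if __name__ == "__main__":
    for model in ("gpt-4o", "gpt-4-0613"):
        # Prints "gpt-4o -> json_mode", then "gpt-4-0613 -> prompt_with_schema".
        print(model, "->", choose_output_strategy(model))
```

The lookup uses exact matching because the IDs are case-sensitive strings (for example, `Mistral-7B-Instruct-v0.1`).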

With a supported model selected, you should see a JSON mode toggle in the Pipe IDE. Turn the toggle ON to activate JSON mode.

Additionally, you can provide a schema in the system prompt or in the messages to further guide the structure of the output.
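As an illustration, the sketch below places a JSON Schema in the system message and then checks the reply. It assumes the common `role`/`content` chat message format. The schema, `build_messages`, and `check_required_keys` are hypothetical. The check only verifies that the required keys are present. It does not perform full JSON Schema validation, which a library such as `jsonschema` can handle.

```python
import json

# Hypothetical schema for illustration; replace it with the shape your app needs.
SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "tags": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "tags"],
}


def build_messages(user_input: str) -> list[dict]:
    """Build chat messages that carry the schema in the system prompt."""
    system_prompt = (
        "Respond only with a JSON object that matches this JSON Schema:\n"
        + json.dumps(SCHEMA, indent=2)
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


def check_required_keys(raw_output: str) -> dict:
    """Parse the model's reply and confirm the schema's required keys exist."""
    data = json.loads(raw_output)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"missing required keys: {missing}")
    return data
```

With JSON mode on, the reply should already be valid JSON. The key check catches cases where the model ignored part of the schema.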

Alternative

If your model does not support JSON mode, ask it in your prompt to respond in JSON and include the schema in the same prompt. The LLM will try to conform to the schema as closely as it can, but without JSON mode this is not guaranteed.
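When you rely on the prompt alone, the reply may wrap the JSON in extra prose. The sketch below handles this by trying Python's `json.JSONDecoder.raw_decode` at each `{` until an object parses, then checking the result against the schema's required keys. It assumes the model returns a single JSON object somewhere in its text. `extract_json_object` and `conforms` are hypothetical helper names, and the sample reply is made up.

```python
import json
from typing import Any


def extract_json_object(text: str) -> dict[str, Any]:
    """Return the first JSON object that decodes cleanly from free-form text.

    Without JSON mode the model may add prose around the JSON, so try
    decoding starting at each '{' until one succeeds.
    """
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            obj, _end = decoder.raw_decode(text, start)
            return obj
        except json.JSONDecodeError:
            pass
        start = text.find("{", start + 1)
    raise ValueError("no JSON object found in model output")


def conforms(obj: dict[str, Any], required: list[str]) -> bool:
    """Cheap conformance check: every required key is present."""
    return all(key in obj for key in required)


if __name__ == "__main__":
    # Hypothetical reply from a model without JSON mode.
    reply = 'Sure! Here is the result:\n{"title": "Intro", "tags": ["json"]}\nHope this helps.'
    data = extract_json_object(reply)
    print(data, conforms(data, ["title", "tags"]))
```

If no candidate parses, or if required keys are missing, a common next step is to retry the request with a stricter instruction.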