Stream

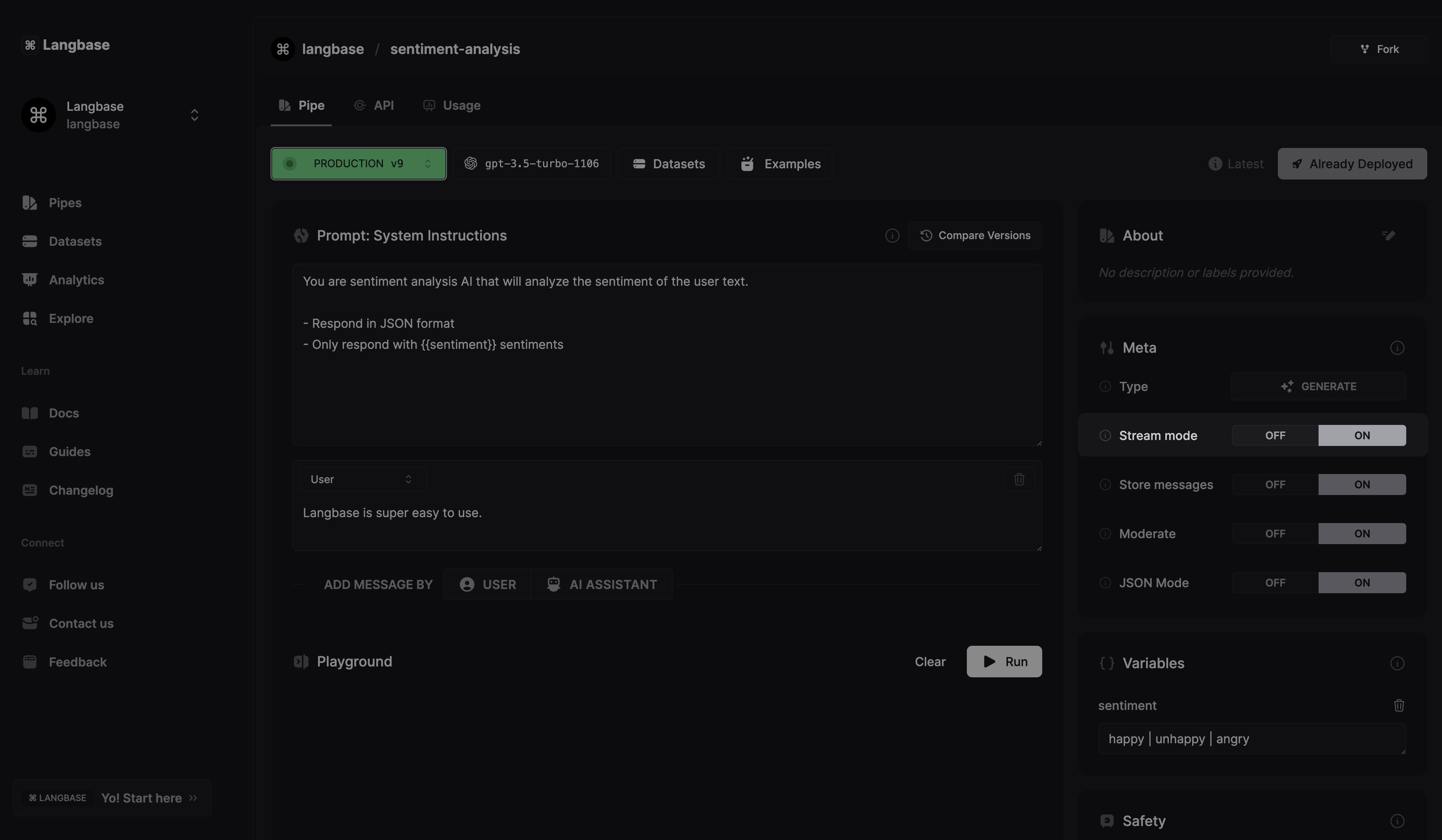

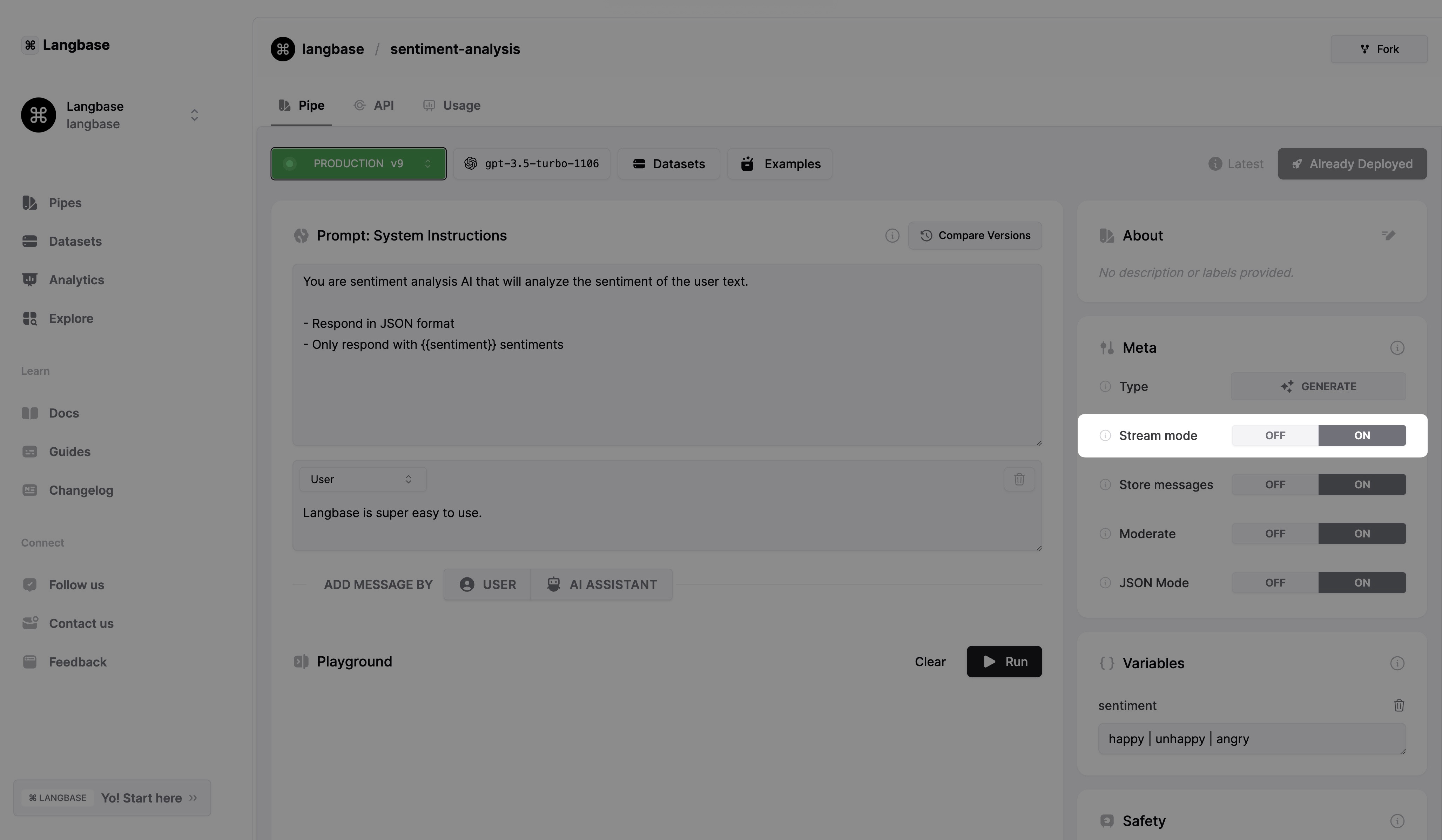

Pipes support streaming LLM responses for all supported LLM models, both through the API and in the Langbase dashboard.

Streaming can be enabled or disabled for each Pipe as needed.

When streaming is enabled, the API returns a streaming response in the OpenAI server-sent events (SSE) format: a series of JSON objects, each representing one chunk of the completion. To implement streaming, parse the response and display each chunk as it arrives.
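The following is a minimal sketch of that parsing step, using only the Python standard library. It assumes that, as in OpenAI's SSE format, each chunk arrives on the wire as a `data: {...}` line and that the stream ends with a `data: [DONE]` sentinel. The example later on this page shows only the JSON payloads. The `iter_content` name is hypothetical and not part of any Langbase SDK.

```python
import json
from typing import Iterable, Iterator


def iter_content(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text of each completion chunk in an OpenAI-style SSE stream."""
    for raw in lines:
        line = raw.strip()
        # SSE events are separated by blank lines; only "data:" lines carry a chunk.
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        # Assumed OpenAI-style end-of-stream sentinel instead of a JSON object.
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # The first chunk may carry only a role (empty content) and the last
            # an empty delta; both are skipped here.
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


if __name__ == "__main__":
    stream = [
        'data: {"choices":[{"index":0,"delta":{"content":"Hello"},"finish_reason":null}]}',
        "",
        'data: {"choices":[{"index":0,"delta":{"content":"!"},"finish_reason":null}]}',
        "",
        "data: [DONE]",
    ]
    # Display each piece of text as soon as it is parsed.
    for text in iter_content(stream):
        print(text, end="", flush=True)
    print()
```

Printing with `flush=True` is what makes the text appear incrementally instead of all at once when the stream ends.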

Parsing the stream is non-trivial, so you may want to use a library for it. Any library that handles OpenAI streaming responses also works with Langbase Pipe streaming responses. You can also refer to our Chatbot Example code to implement streaming in your app.
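Part of what makes parsing non-trivial is that network reads do not line up with event boundaries. A single read can hold half an event, several events, or half of a multi-byte UTF-8 character. The sketch that follows is a hypothetical `iter_lines` helper built on the Python standard library. It buffers raw bytes and yields only complete text lines. It assumes events are newline-delimited, as in OpenAI-style SSE streams.

```python
import codecs
from typing import Iterable, Iterator


def iter_lines(byte_chunks: Iterable[bytes]) -> Iterator[str]:
    """Turn arbitrary network reads into complete, decoded text lines."""
    # An incremental decoder holds on to a multi-byte UTF-8 character that is
    # split across two reads until its remaining bytes arrive.
    decoder = codecs.getincrementaldecoder("utf-8")()
    buffer = ""
    for chunk in byte_chunks:
        buffer += decoder.decode(chunk)
        # Everything before the last newline is complete; the rest waits for more data.
        *complete, buffer = buffer.split("\n")
        for line in complete:
            # Tolerate CRLF line endings.
            yield line.rstrip("\r")
    # Flush whatever is left once the stream closes.
    buffer += decoder.decode(b"", final=True)
    if buffer:
        yield buffer.rstrip("\r")
```

In practice you would feed it the byte chunks from your HTTP client's streaming response. A maintained OpenAI-compatible streaming library handles these details for you, including SSE edge cases this sketch ignores, such as multi-line `data:` fields.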

Here is what a streaming response looks like.

Streaming response

```json
{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"role":"assistant","content":""},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":"Hello"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":"!"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" How"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" can"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" I"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" assist"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" you"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":" today"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{"content":"?"},"logprobs":null,"finish_reason":null}]}

{"id":"chatcmpl-9gDTI1K8XnBBhGou2ZnF7wQRU3M7r","object":"chat.completion.chunk","created":1719848588,"model":"gpt-3.5-turbo-0125","system_fingerprint":null,"choices":[{"index":0,"delta":{},"logprobs":null,"finish_reason":"stop"}]}
```
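Once each chunk is parsed, you rebuild the full reply by concatenating the `delta.content` fields in order and watching for a non-null `finish_reason`. In the example above, the first chunk only sets `role`, the middle chunks each carry a piece of text, and the last chunk has an empty `delta` with `finish_reason` set to `"stop"`. Here is a minimal Python sketch of that step. The `assemble` helper is hypothetical and assumes one choice per chunk. The demo chunks are trimmed to the fields the sketch reads.

```python
import json
from typing import Iterable, Optional, Tuple


def assemble(chunks: Iterable[str]) -> Tuple[str, Optional[str]]:
    """Concatenate streamed delta contents and return (text, finish_reason)."""
    parts = []
    finish_reason = None
    for raw in chunks:
        chunk = json.loads(raw)
        for choice in chunk.get("choices", []):
            # Role-only and final chunks have no content; treat them as "".
            parts.append(choice.get("delta", {}).get("content") or "")
            if choice.get("finish_reason") is not None:
                finish_reason = choice["finish_reason"]
    return "".join(parts), finish_reason


if __name__ == "__main__":
    pieces = ["Hello", "!", " How", " can", " I", " assist", " you", " today", "?"]
    example = [
        '{"choices":[{"index":0,"delta":{"role":"assistant","content":""},"finish_reason":null}]}',
        *(
            json.dumps({"choices": [{"index": 0, "delta": {"content": p}, "finish_reason": None}]})
            for p in pieces
        ),
        '{"choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}',
    ]
    text, reason = assemble(example)
    print(text)    # Hello! How can I assist you today?
    print(reason)  # stop
```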