Guide: Retrieval Augmented Generation (RAG)

A step-by-step guide to implementing RAG using Langbase Pipes and Memory.

In this guide, we will build a RAG application that lets users ask questions about their documents. We call it the Documents QnA RAG App. You can use it to ask questions about the documents you upload to Langbase Memory.

First, we will create a Langbase memory, upload data to it, and connect it to a pipe. We will then create a Next.js application that uses the Langbase SDK to call the pipe and generate responses.

Let's get started!

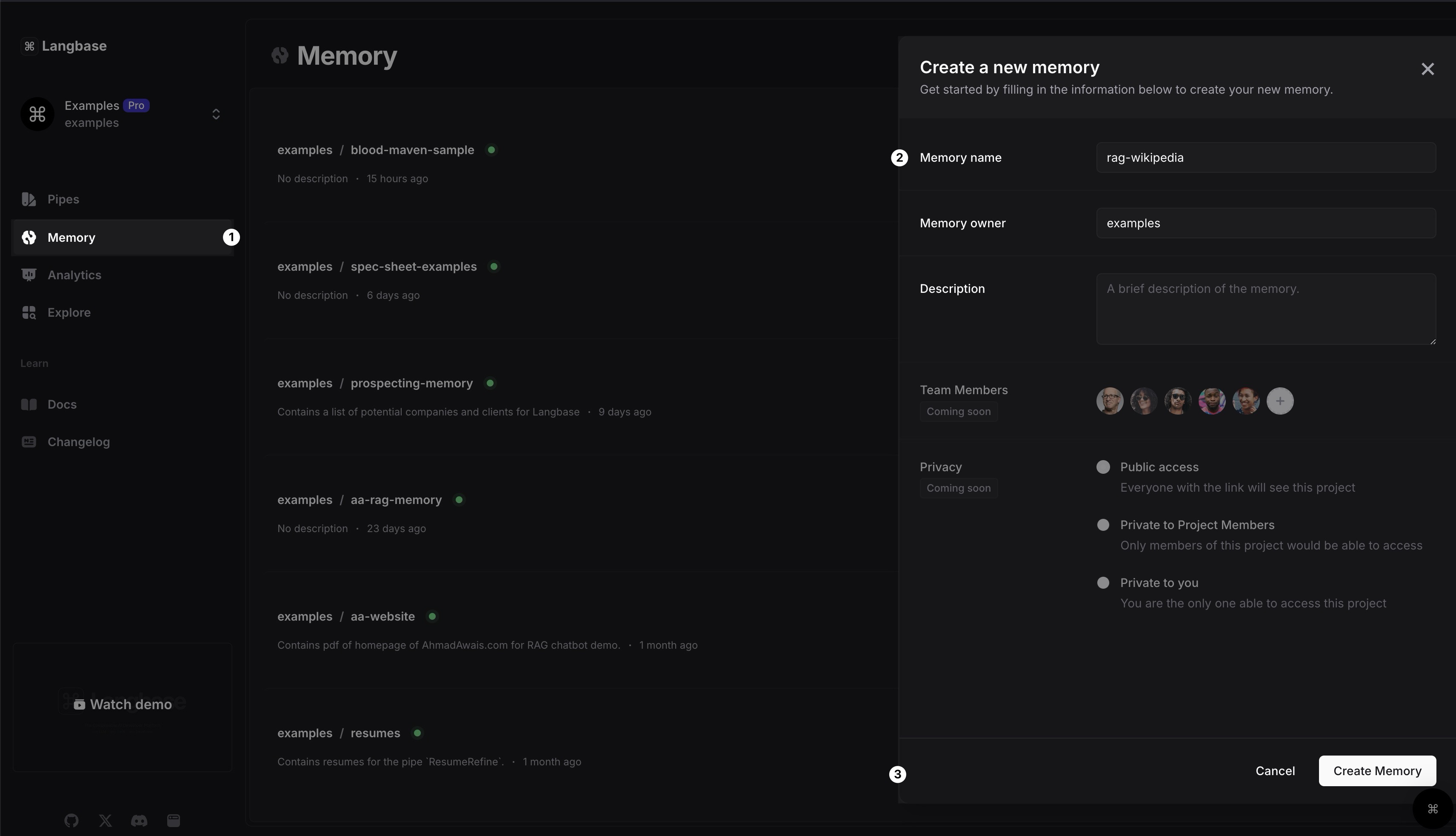

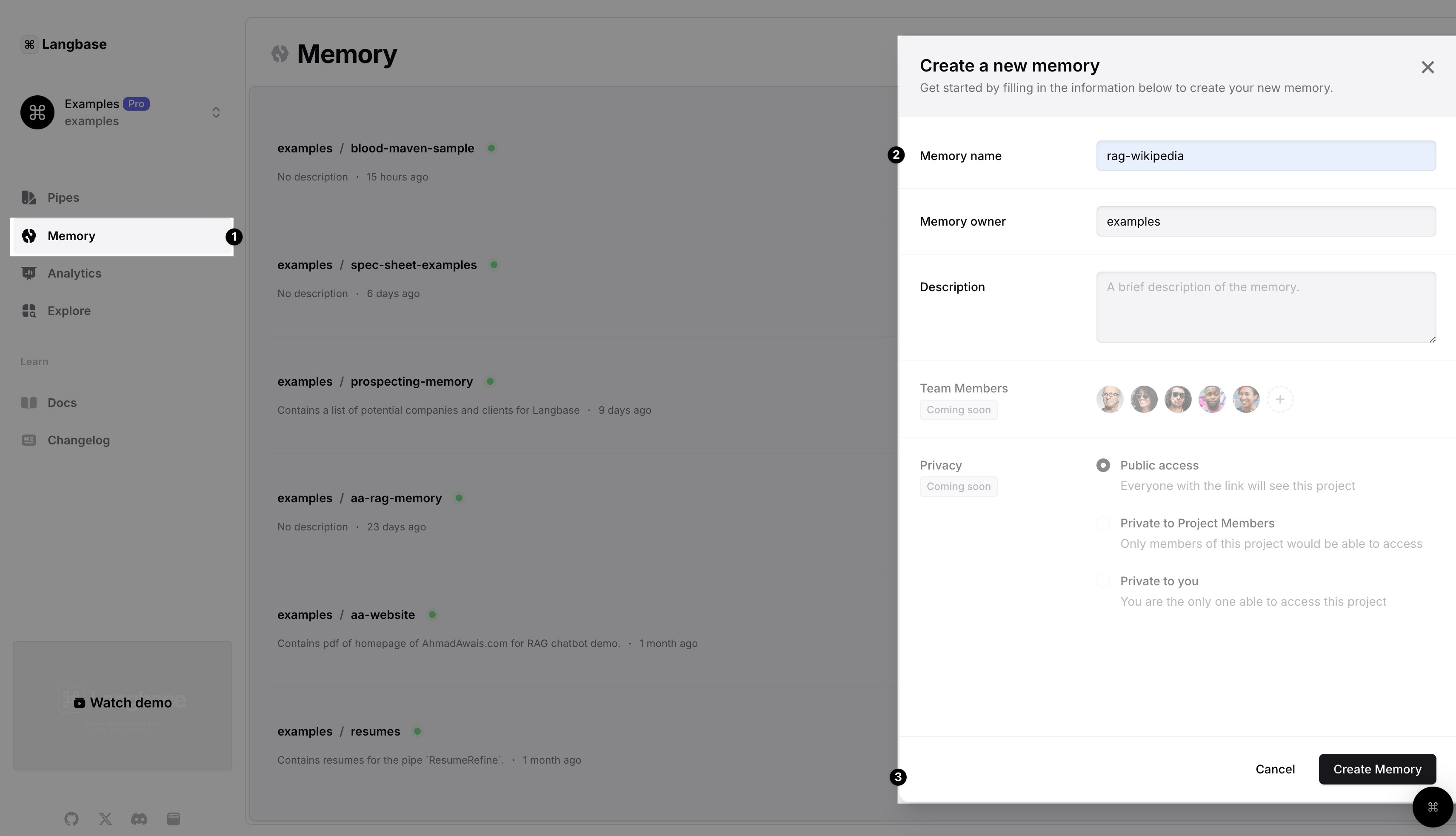

Step #1

In the Langbase dashboard, navigate to the Memory section, create a new memory and name it rag-wikipedia. You can also add a description to the memory.

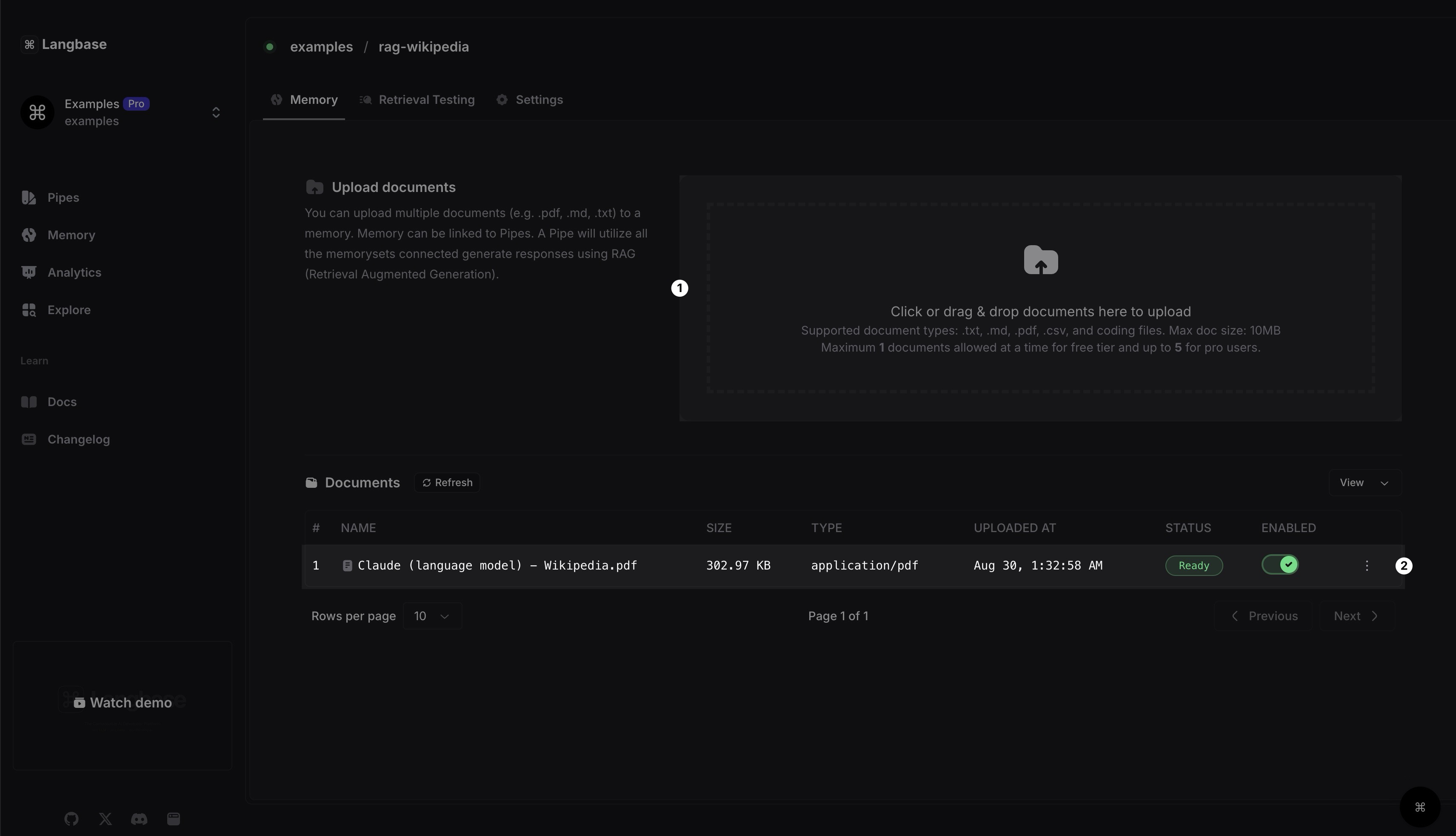

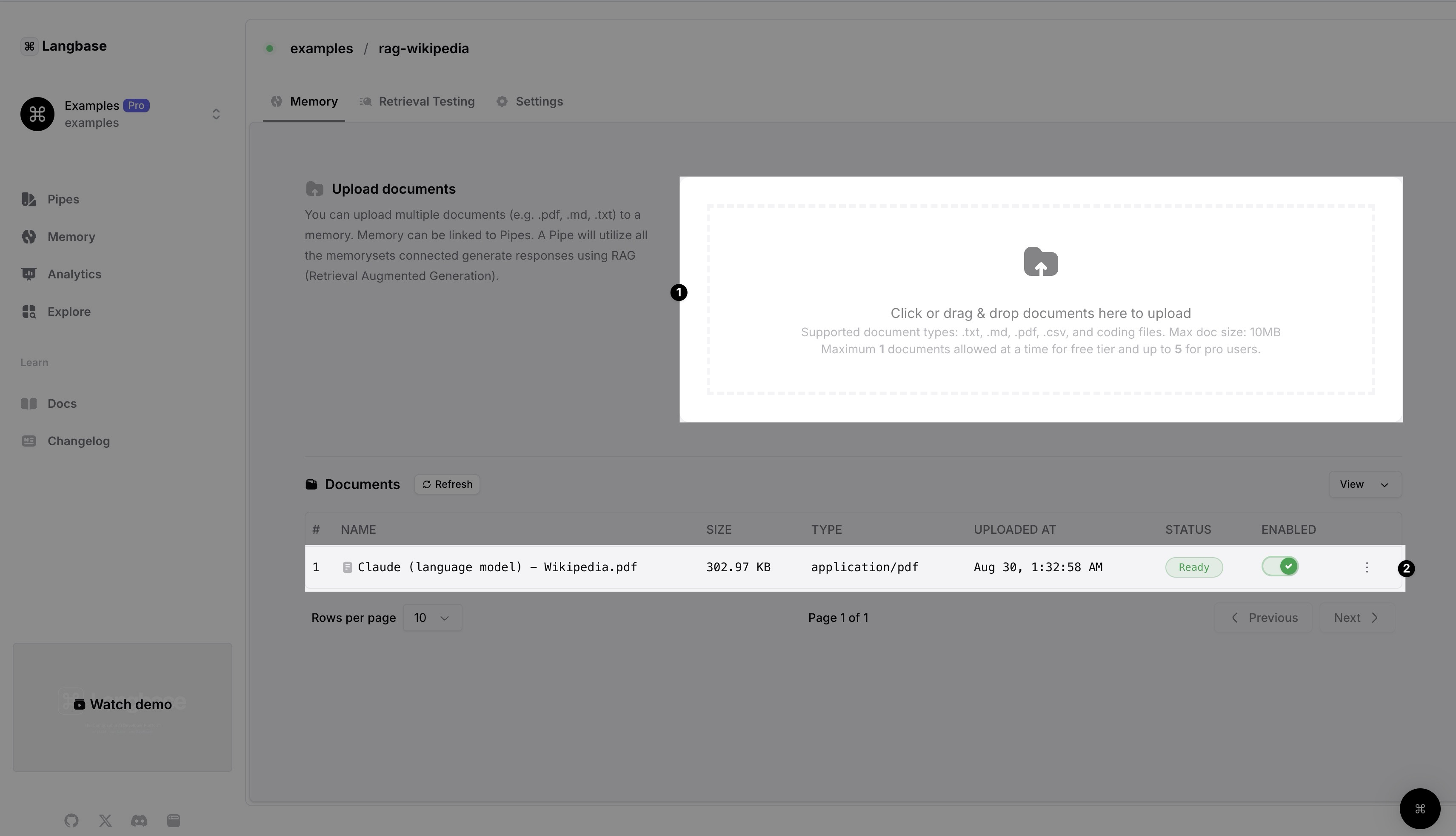

Step #2

Upload the data to the memory you created. You can upload any data for your RAG. For this example, we uploaded a PDF file of the Wikipedia page of Claude.

You can either drag and drop the file or click on the upload button to select the file. Once uploaded, wait a few minutes to let Langbase process the data. Langbase takes care of chunking, embedding, and indexing the data for you.

Click on the Refresh button to see the latest status. Once you see the status as Ready, you can move to the next step.
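
To give a feel for the chunking step mentioned above, here is a minimal sketch of fixed-size chunking with overlap. This guide does not describe how Langbase actually chunks documents, so the function name `chunkText` and its parameters are hypothetical and only illustrate the general idea.

```typescript
// Hypothetical illustration only: Langbase's real chunking strategy is not
// described in this guide. This splits text into fixed-size chunks where each
// chunk repeats the last `overlap` characters of the previous one.
function chunkText(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0) {
    throw new Error('chunkSize must be positive');
  }
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error('overlap must be in the range [0, chunkSize)');
  }

  const chunks: string[] = [];
  const step = chunkSize - overlap;

  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once the current chunk has reached the end of the text.
    if (start + chunkSize >= text.length) break;
  }

  return chunks;
}
```

The overlap keeps a sentence that straddles a chunk boundary partially visible in both chunks, which is one common reason to use it.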

Create Memory and Upload Data using Langbase Memory API

Alternatively, we can use the Langbase Memory API to create the memory and upload the data.

Create a Memory

async function createNewMemory() {
  const url = 'https://api.langbase.com/v1/memory';
  const apiKey = '<YOUR_API_KEY>';

  const memory = {
    name: "rag-wikipedia",
    description: "This memory contains content of AhmadAwais.com homepage",
  };

  const response = await fetch(url, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(memory),
  });

  const newMemory = await response.json();
  return newMemory;
}

You will get a response with the newly created memory object:

{
  "name": "rag-wikipedia",
  "description": "This memory contains content of AhmadAwais.com homepage",
  "owner_login": "langbase",
  "url": "https://langbase.com/memorysets/langbase/rag-wikipedia"
}
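
Since `response.json()` returns untyped data, it can help to check the shape before using it. The sketch below is a type guard based only on the fields in the sample response above; the interface name `MemoryResponse` and the guard `isMemoryResponse` are hypothetical, and the real API may return additional or different fields.

```typescript
// Shape taken from the sample response in this guide. Treat it as an
// assumption: the API may return more fields or a different error shape.
interface MemoryResponse {
  name: string;
  description: string;
  owner_login: string;
  url: string;
}

// Hypothetical helper: returns true only if every expected field is a string.
function isMemoryResponse(value: unknown): value is MemoryResponse {
  if (typeof value !== 'object' || value === null) return false;
  const record = value as Record<string, unknown>;
  return (
    typeof record['name'] === 'string' &&
    typeof record['description'] === 'string' &&
    typeof record['owner_login'] === 'string' &&
    typeof record['url'] === 'string'
  );
}
```

One way to use it is to call `isMemoryResponse(newMemory)` on the value returned by `createNewMemory()` and throw an error when it returns `false`, so a failed request does not silently pass through as a memory object.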

Upload Data

Uploading data is a two-step process. First, we get a signed URL to upload the data. Then, we upload the data to the signed URL. Let's see how to get a signed URL:

async function getSignedUploadUrl() {
  const url = 'https://api.langbase.com/v1/memory/documents';
  const apiKey = '<YOUR_API_KEY>';

  const newDoc = {
    memoryName: 'rag-wikipedia',
    ownerLogin: 'langbase',
    fileName: 'ahmadawais-homepage.pdf',
  };

  const response = await fetch(url, {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(newDoc),
  });

  const res = await response.json();
  return res;
}

The response contains the signed URL, something like this:

{
  "signedUrl": "https://b.langbase.com/..."
}

Now that we have the signed URL, we can upload the data to it:

const fs = require("fs");

async function uploadDocument(signedUrl, filePath) {
  const file = fs.readFileSync(filePath);

  const response = await fetch(signedUrl, {
    method: 'PUT',
    headers: {
      'Content-Type': 'application/pdf',
    },
    body: file,
  });

  return response;
}

You should get a response with status 200 if the upload is successful. Memory accepts other file types as well. Check out the Memory API for more details.
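
The upload function above hardcodes `application/pdf`. If you upload other kinds of files, the `Content-Type` header would presumably need to match the file. The sketch below maps a few file extensions to their standard MIME types. The helper name `contentTypeFor` is hypothetical, and which formats Langbase Memory accepts is not confirmed in this guide, so treat the list as an assumption.

```typescript
// Standard MIME types for a few common document formats. Which formats
// Langbase Memory accepts is NOT confirmed here; check the Memory API docs.
const MIME_TYPES: Record<string, string> = {
  pdf: 'application/pdf',
  txt: 'text/plain',
  md: 'text/markdown',
  csv: 'text/csv',
};

// Hypothetical helper: returns the Content-Type for a file name, or throws
// when the extension is missing or not in the table above.
function contentTypeFor(fileName: string): string {
  const dot = fileName.lastIndexOf('.');
  const extension = dot === -1 ? '' : fileName.slice(dot + 1).toLowerCase();

  // Own-property check so names like "file.constructor" are rejected.
  if (!Object.prototype.hasOwnProperty.call(MIME_TYPES, extension)) {
    throw new Error(`Unsupported file extension: "${extension}"`);
  }
  return MIME_TYPES[extension] as string;
}
```

With this in place, the header in `uploadDocument` could be set from `contentTypeFor(filePath)` instead of a fixed string.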

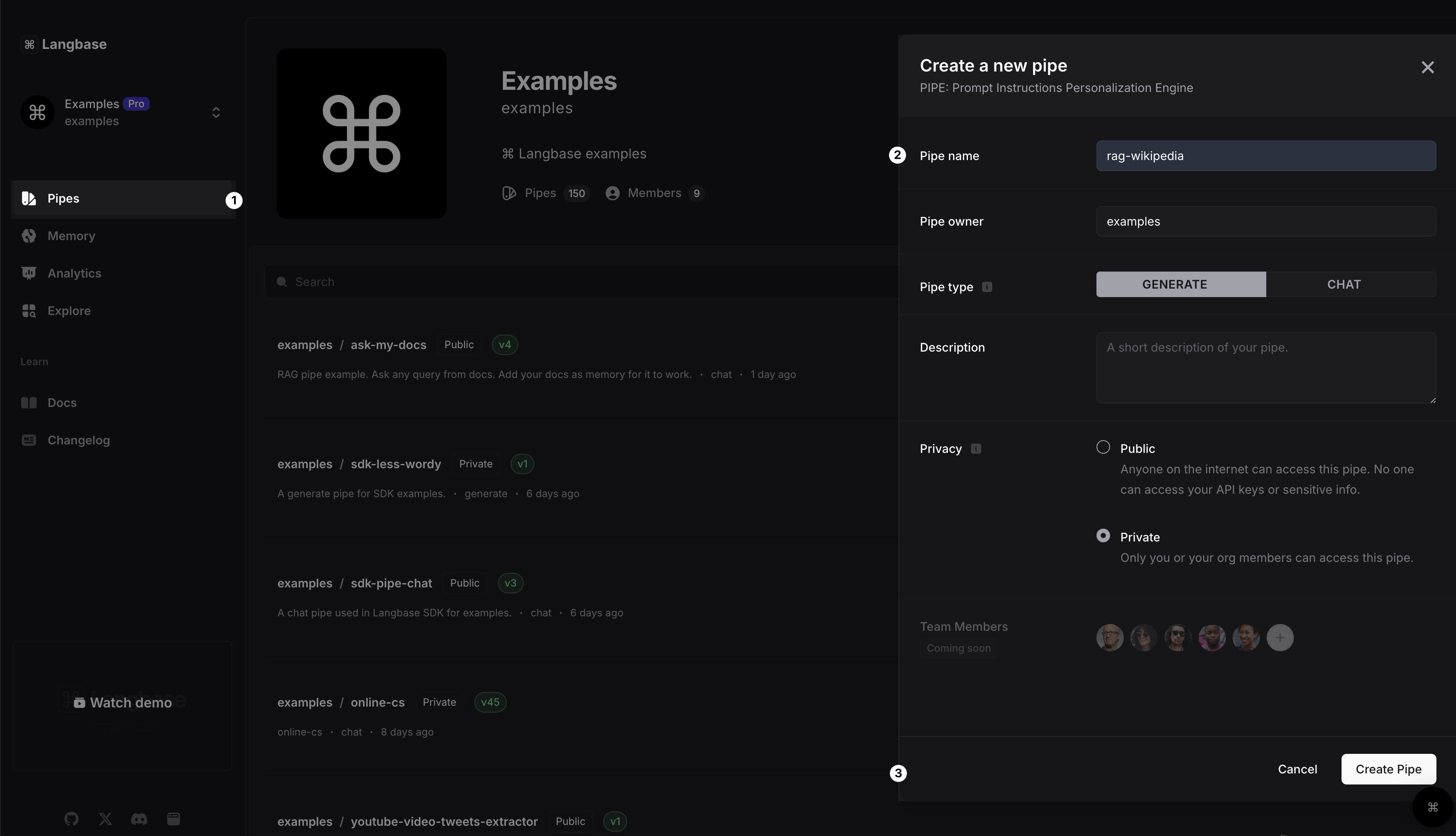

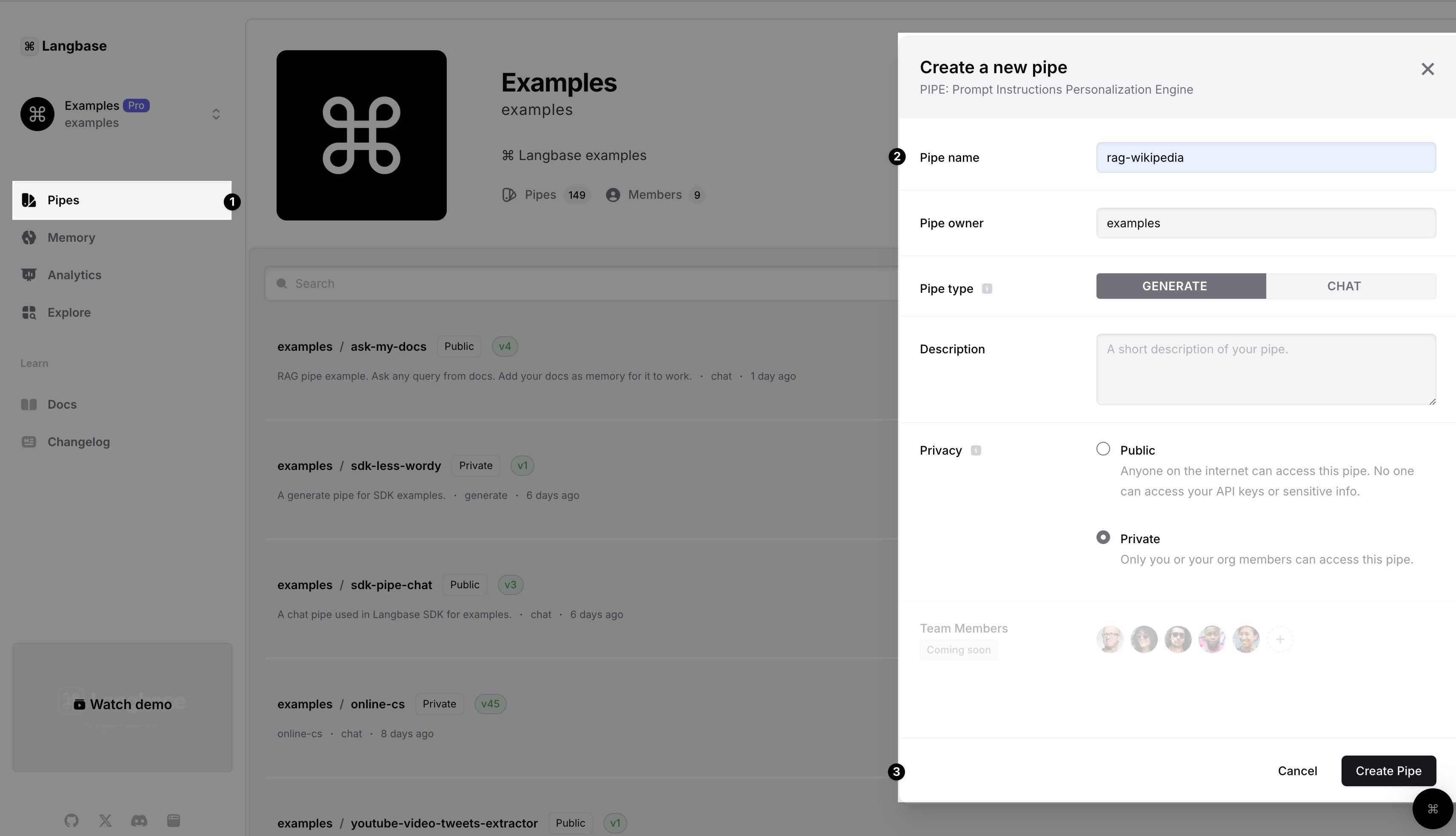

Step #4

In your Langbase dashboard, create a new pipe and name it rag-wikipedia. You can also add a description to the pipe.

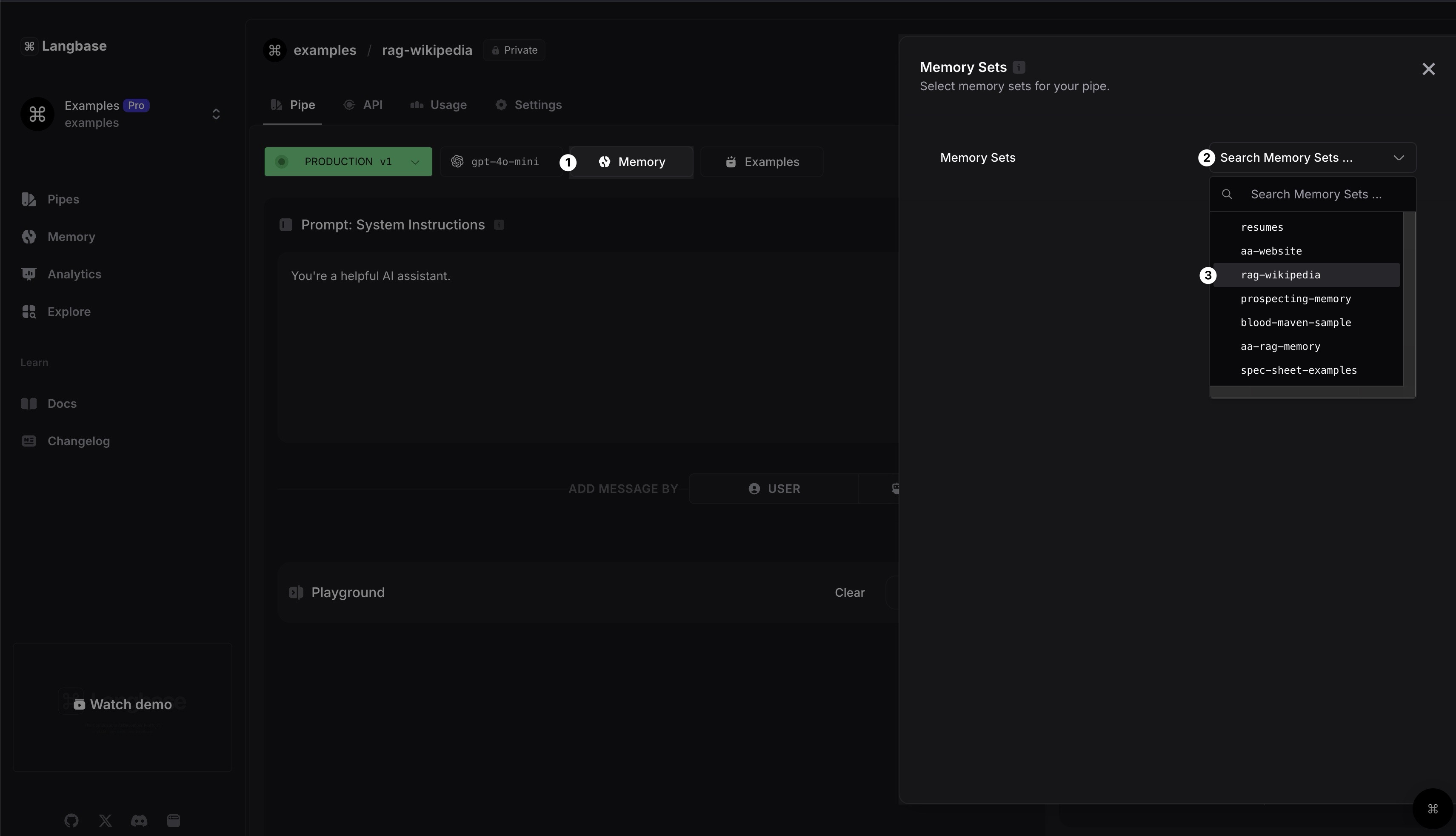

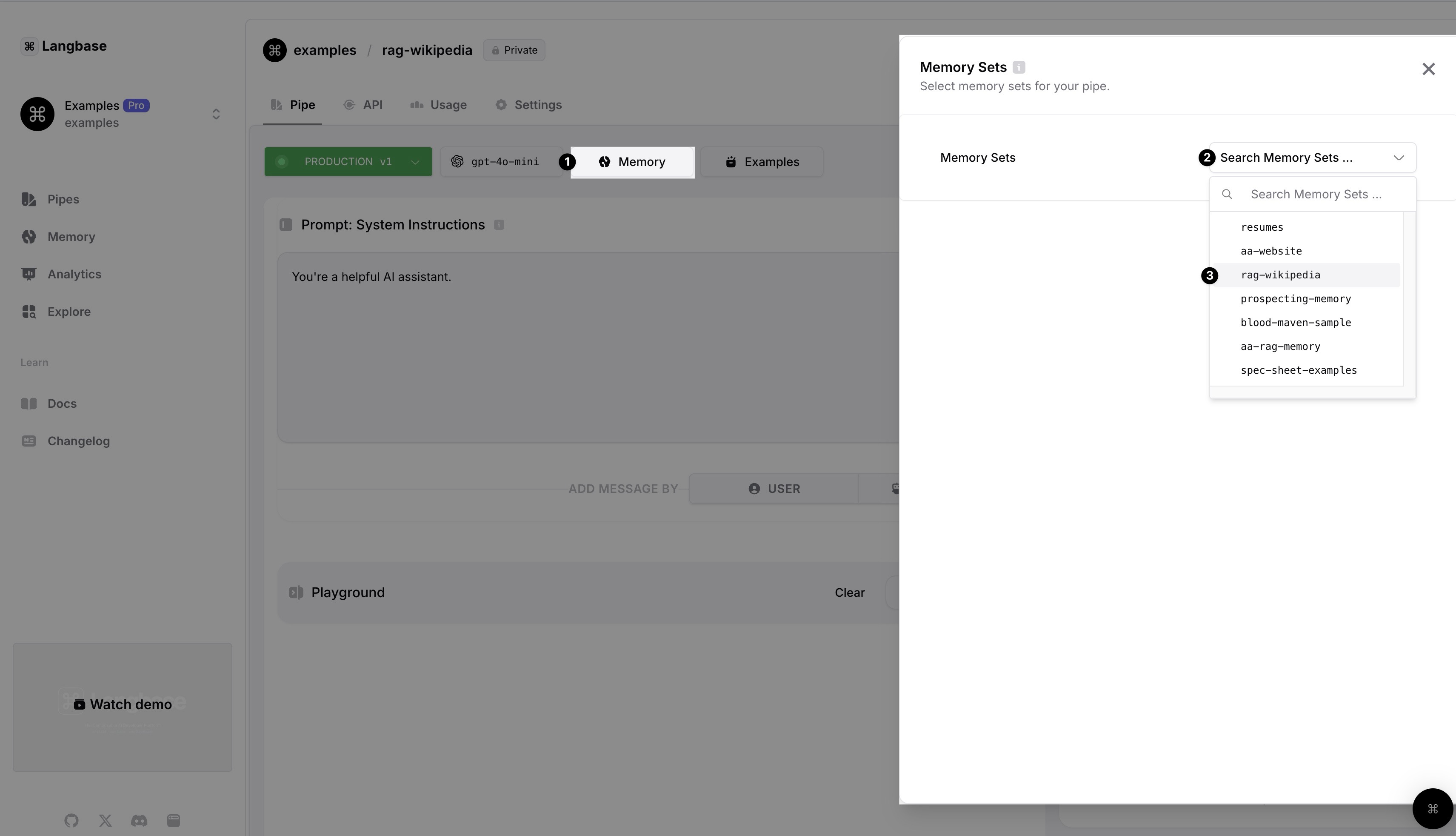

Step #5

Open the newly created pipe and click on the Memory button. From the dropdown, select the memory you created in step #1, and that's it.

Now that we have created a memory, uploaded data to it, and connected it to a pipe, we can create a Next.js application that uses Langbase SDK to generate responses.
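
Conceptually, attaching a memory to a pipe means that relevant chunks from your documents are retrieved and supplied to the model along with the question. How Langbase does this internally is not described in this guide. The sketch below only illustrates the general RAG idea, and every name in it (`RetrievedChunk`, `buildAugmentedPrompt`, the prompt wording) is hypothetical.

```typescript
// Conceptual illustration only. This is NOT how Langbase builds prompts;
// it shows the general "retrieve, then augment the prompt" idea behind RAG.
interface RetrievedChunk {
  text: string;
  score: number; // similarity to the question; higher means more relevant
}

function buildAugmentedPrompt(
  question: string,
  chunks: RetrievedChunk[],
  topK: number
): string {
  const context = chunks
    .slice() // copy so the caller's array is not reordered
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((chunk, index) => `[${index + 1}] ${chunk.text}`)
    .join('\n');

  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}
```

In the app we build next, none of this needs to be written by hand: the pipe receives only the user's question, and the connected memory supplies the context.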

Step #6

Clone the RAG Starter Project to get started. The app contains a single page with a form to ask a question about documents. This project uses:

- Langbase SDK

- Langbase Pipe

- Langbase Memory

- Next.js

- Tailwind CSS

Step #7

Install the dependencies using the following command:

npm install

Install the Langbase SDK using the following command:

npm install langbase

Step #8

Create a route app/api/generate/route.ts and add the following code:

import { Pipe } from 'langbase';
import { NextRequest } from 'next/server';

/**
 * Generate response and stream from Langbase Pipe.
 *
 * @param req
 * @returns
 */
export async function POST(req: NextRequest) {
  try {
    if (!process.env.LANGBASE_PIPE_API_KEY) {
      throw new Error(
        'Please set LANGBASE_PIPE_API_KEY in your environment variables.'
      );
    }

    const { prompt } = await req.json();

    // 1. Initiate the Pipe.
    const pipe = new Pipe({
      apiKey: process.env.LANGBASE_PIPE_API_KEY
    });

    // 2. Generate a stream by asking a question
    const stream = await pipe.streamText({
      messages: [{ role: 'user', content: prompt }]
    });

    // 3. Done, return the stream in a readable stream format.
    return new Response(stream.toReadableStream());
  } catch (error: any) {
    return new Response(error.message, { status: 500 });
  }
}
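
The route above passes whatever `prompt` it receives straight to the pipe. A small validation step could reject malformed requests before any call is made. The helper below is a sketch under that assumption; `parsePrompt` is a hypothetical name and is not part of the Langbase SDK or the starter project.

```typescript
// Hypothetical helper, not part of the Langbase SDK or the starter project.
// Validates a parsed JSON body and returns the trimmed prompt string.
function parsePrompt(body: unknown): string {
  if (typeof body !== 'object' || body === null) {
    throw new Error('Request body must be a JSON object.');
  }

  const prompt = (body as Record<string, unknown>)['prompt'];
  if (typeof prompt !== 'string' || prompt.trim() === '') {
    throw new Error('"prompt" must be a non-empty string.');
  }

  return prompt.trim();
}
```

In the route, this would replace the destructuring line with something like `const prompt = parsePrompt(await req.json());`. You might also return status 400 rather than 500 for these validation errors, since they are caused by the request and not by the server.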

Step #9

Go to starters/documents-qna-rag/components/langbase/docs-qna.tsx and add the following import:

import { fromReadableStream } from 'langbase';

Add the following code to the DocsQnA component after the state declarations:

const handleSubmit = async (e: React.FormEvent) => {
  // Prevent form submission
  e.preventDefault();

  // Prevent empty prompt or loading state
  if (!prompt.trim() || loading) return;

  // Change loading state
  setLoading(true);
  setCompletion('');
  setError('');

  try {
    // Fetch response from the server
    const response = await fetch('/api/generate', {
      method: 'POST',
      body: JSON.stringify({ prompt }),
      // The body is JSON, so declare it as such
      headers: { 'Content-Type': 'application/json' },
    });

    // If response is not successful, throw an error
    if (response.status !== 200) {
      const errorData = await response.text();
      throw new Error(errorData);
    }

    // Parse response stream
    if (response.body) {
      // Stream the response body
      const stream = fromReadableStream(response.body);

      // Iterate over the stream
      for await (const chunk of stream) {
        // Guard against chunks without a choices array
        const content = chunk?.choices?.[0]?.delta?.content;
        if (content) setCompletion(prev => prev + content);
      }
    }
  } catch (error: any) {
    setError(error.message);
  } finally {
    setLoading(false);
  }
};
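
The loop in `handleSubmit` reads the text of each chunk from `choices[0].delta.content` and appends it to the completion. The sketch below isolates that logic in two pure functions so it can be tested without a network call. The `StreamChunk` type is inferred only from the handler above, and the helper names `extractDelta` and `joinDeltas` are hypothetical.

```typescript
// Chunk shape inferred from the handler above (choices[0].delta.content).
// Anything beyond that path is an assumption about the stream format.
interface StreamChunk {
  choices?: Array<{ delta?: { content?: string | null } }>;
}

// Returns the text carried by one chunk, or '' if the chunk has none.
function extractDelta(chunk: StreamChunk | null | undefined): string {
  return chunk?.choices?.[0]?.delta?.content ?? '';
}

// Concatenates the text of all chunks, mirroring how the component
// appends each piece to the completion state.
function joinDeltas(chunks: Array<StreamChunk | null | undefined>): string {
  return chunks.map((chunk) => extractDelta(chunk)).join('');
}
```

Chunks with an empty `choices` array or a missing `delta` contribute an empty string, so the completion is built only from chunks that actually carry text.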

Replace the following piece of code in the DocsQnA component:

onSubmit={(e) => {
  e.preventDefault();
}}

With the following code:

onSubmit={handleSubmit}

Step #10

Create a copy of .env.local.example and rename it to .env.local. Add the API key of the pipe that we created in step #4 to the .env.local file:

# !! SERVER SIDE ONLY !!
# Pipes.
LANGBASE_PIPE_API_KEY="YOUR_PIPE_API_KEY"
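
The API route reads this variable at request time and throws if it is missing. If you read more than one variable, a small helper can centralize that check. This is a sketch, and `requireEnv` is a hypothetical name rather than something provided by Next.js or Langbase. The environment is passed in as a plain object so the function is easy to test.

```typescript
// Hypothetical helper: returns the value of a required environment variable,
// or throws a descriptive error when it is missing or blank.
function requireEnv(
  env: Record<string, string | undefined>,
  key: string
): string {
  const value = env[key];
  if (value === undefined || value.trim() === '') {
    throw new Error(`Please set ${key} in your environment variables.`);
  }
  return value;
}
```

On the server, you would call it as `requireEnv(process.env, 'LANGBASE_PIPE_API_KEY')`. Keep calls like this in server-side code only, so the key is never shipped to the browser.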

Step #11

Run the project using the following command:

npm run dev

Your app should be running on http://localhost:3000. You can now ask questions about the documents you uploaded to the memory.

🎉 That's it! You have successfully implemented a RAG application. Since it is a Next.js application, you can deploy it to any platform of your choice, such as Vercel, Netlify, or Cloudflare.

You can see the live demo of this project here.

Further resources:

- Complete code on GitHub.

- Pipe used in this example on Langbase Pipes.