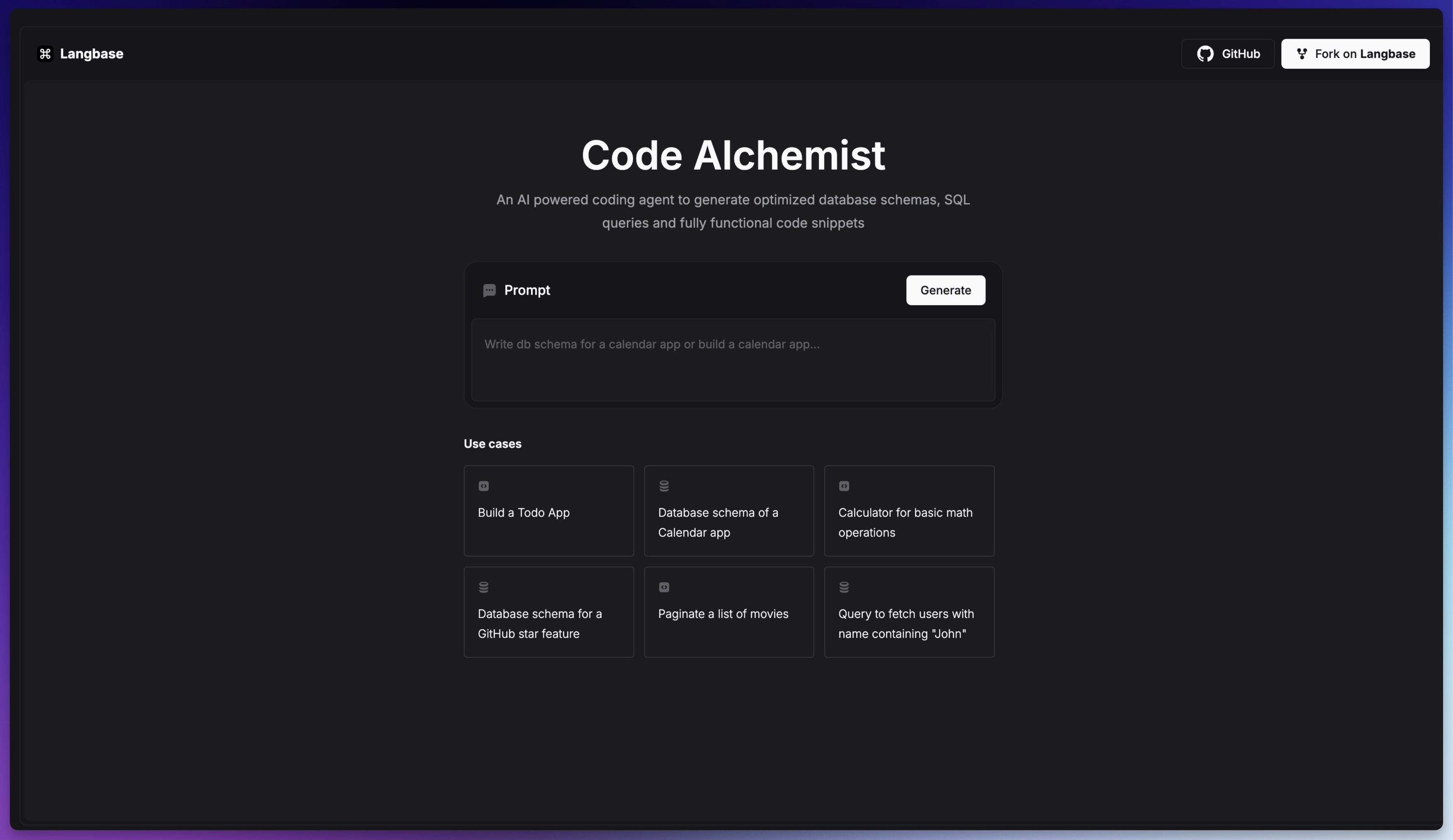

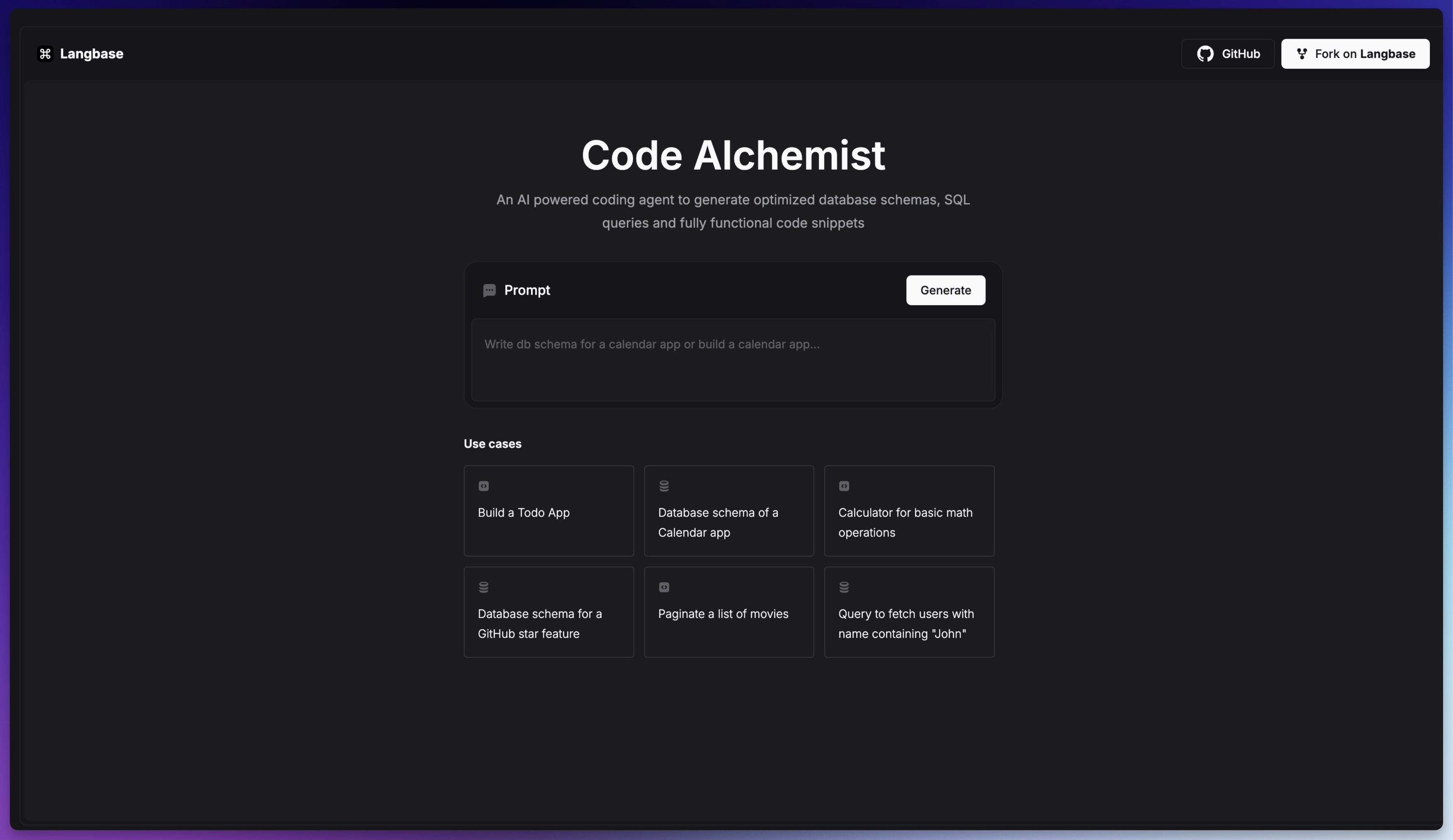

Build a composable AI Devin

A step-by-step guide to build an AI coding agent using Langbase SDK.

In this guide, you will build an AI coding agent, CodeAlchemist aka Devin, that uses multiple pipe agents to:

- Analyze the user prompt to identify if it's related to coding, database architecture, or a random prompt.

- Decide, using a ReAct-based architecture, whether to call the code pipe agent or the database pipe agent.

- Generate raw React code for a coding query.

- Generate optimized SQL for a database query.

You will create a basic Next.js application that uses the Langbase SDK to connect to the pipe agents and stream the final response back to the user.

Let's get started!

Step #0

Let's quickly set up the project.

Initialize the project

To build the agent, you need to have a Next.js starter application. If you don't have one, you can create a new Next.js application using the following command:

Initialize project

npx create-next-app@latest code-alchemist

This will create a new Next.js application in the code-alchemist directory. Navigate to the directory and start the development server:

Run dev server

npm run dev

Install dependencies

Install the Langbase SDK in this project using npm or pnpm.

Install Langbase SDK

npm install langbase

Step #1

Every request you send to Langbase needs an API key. This guide assumes you already have one. If you do not have an API key yet, generate one from your Langbase account before continuing.

Create an .env file in the root of your project and add your Langbase API key.

.env

LANGBASE_API_KEY=xxxxxxxxx

Replace xxxxxxxxx with your Langbase API key.
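Later in this guide the SDK client reads the key with a non-null assertion (process.env.LANGBASE_API_KEY!), so a missing key only shows up when a request fails. The following is a minimal sketch of an early check. The helper name requireEnv is hypothetical and not part of the Langbase SDK.

```typescript
// Hypothetical helper (not part of the Langbase SDK): fail fast when a
// required environment variable is missing or blank.
type Env = Record<string, string | undefined>;

function requireEnv(name: string, env: Env): string {
	const value = env[name]?.trim();
	if (!value) {
		throw new Error(`Missing environment variable: ${name}`);
	}
	return value;
}

// Example with an in-memory object; in the app you would pass process.env.
const apiKey = requireEnv('LANGBASE_API_KEY', { LANGBASE_API_KEY: 'xxxxxxxxx' });
```

Passing the environment in as an argument keeps the helper easy to test and avoids reading process.env at import time.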

Step #2

Fork the following pipe agents in Langbase Studio. These pipe agents will power CodeAlchemist:

- Code Alchemist: Decision-maker pipe agent. Analyzes the user prompt and decides which pipe agent to call.

- React Copilot: Generates a single React component for a given user prompt.

- Database Architect: Generates optimized SQL for a query or an entire database schema.

Step #3

Create a new file app/api/generate/route.ts. This API route will generate the AI response for the user prompt. Please add the following code:

API route

import { runCodeGenerationAgent } from '@/utils/run-agents';

import { validateRequestBody } from '@/utils/validate-request-body';

export const runtime = 'edge';

/**

* Handles the POST request for the specified route.

*

* @param req - The request object.

* @returns A response object.

*/

export async function POST(req: Request) {

try {

const { prompt, error } = await validateRequestBody(req);

if (error || !prompt) {

return new Response(JSON.stringify(error), { status: 400 });

}

const { stream, pipe } = await runCodeGenerationAgent(prompt);

if (stream) {

return new Response(stream, {

headers: {

pipe

}

});

}

return new Response(JSON.stringify({ error: 'No stream' }), {

status: 500

});

} catch (error: any) {

console.error('Uncaught API Error:', error.message);

return new Response(JSON.stringify(error.message), { status: 500 });

}

}

Here is a quick explanation of what's happening in the code above:

- Import the runCodeGenerationAgent function from the run-agents.ts file.

- Import the validateRequestBody function from the validate-request-body.ts file.

- Define the POST function that handles the POST request made to the /api/generate endpoint.

- Validate the request body using the validateRequestBody function.

- Call the runCodeGenerationAgent function with the user prompt.

- Return the response stream, with the pipe name set in the pipe response header.

You can find all these functions in the utils directory of the CodeAlchemist source code.
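This guide does not show validateRequestBody. The sketch below is one way such a helper might look, assuming only what the route uses: it receives the request and resolves to an object with an optional prompt and an optional error. The error shape and messages are assumptions, and the actual implementation in the CodeAlchemist source may differ.

```typescript
// Assumed result shape, inferred from how the route destructures it.
type ValidationResult = { prompt?: string; error?: { message: string } };

// Pure check on the already-parsed JSON payload.
function validatePromptPayload(payload: unknown): ValidationResult {
	if (typeof payload !== 'object' || payload === null) {
		return { error: { message: 'Request body must be a JSON object.' } };
	}
	const prompt = (payload as { prompt?: unknown }).prompt;
	if (typeof prompt !== 'string' || prompt.trim() === '') {
		return { error: { message: 'A non-empty "prompt" string is required.' } };
	}
	return { prompt: prompt.trim() };
}

// Accepts anything with a json() method, which includes a Fetch API Request.
async function validateRequestBody(req: {
	json(): Promise<unknown>;
}): Promise<ValidationResult> {
	try {
		return validatePromptPayload(await req.json());
	} catch {
		// req.json() rejects when the body is not valid JSON.
		return { error: { message: 'Request body is not valid JSON.' } };
	}
}
```

Keeping the payload check separate from the request parsing makes the validation rules testable without constructing a Request.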

Step #4

You are building a ReAct-based architecture, which means the system first reasons over the information it has and then decides how to act.

The Code Alchemist pipe agent is a decision-making agent. It defines two tools, runPairProgrammer and runDatabaseArchitect, which are called depending on the user's query.

Create a new file app/utils/run-agents.ts. This file will contain the logic to call all the pipe agents and stream the final response back to the user. Please add the following code:

run-agents.ts

import 'server-only';

import { Langbase, getToolsFromRunStream } from 'langbase';

const langbase = new Langbase({

apiKey: process.env.LANGBASE_API_KEY!

});

/**

* Runs a code generation agent with the provided prompt.

*

* @param prompt - The input prompt for code generation

* @returns A promise that resolves to an object containing:

* - pipe: The name of the pipe used for generation

* - stream: The output stream containing the generated content

*

* @remarks

* This function processes the prompt through a langbase pipe and handles potential tool calls.

* If tool calls are detected, it delegates to the appropriate PipeAgent.

* Otherwise, it returns the direct output from the code-alchemist pipe.

*/

export async function runCodeGenerationAgent(prompt: string) {

const {stream} = await langbase.pipes.run({

stream: true,

name: 'code-alchemist',

messages: [{ role: 'user', content: prompt }],

})

const [streamForCompletion, streamForReturn] = stream.tee();

const toolCalls = await getToolsFromRunStream(streamForCompletion);

const hasToolCalls = toolCalls.length > 0;

if(hasToolCalls) {

const toolCall = toolCalls[0];

const toolName = toolCall.function.name;

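// Guard against tool names that have no registered pipe agent.

const agent = PipeAgents[toolName];

if (!agent) {

throw new Error(`No pipe agent registered for tool: ${toolName}`);

}

const response = await agent(prompt);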

return {

pipe: response.pipe,

stream: response.stream

};

} else {

return {

pipe: 'code-alchemist',

stream: streamForReturn

}

}

}

type PipeAgentsT = Record<string, (prompt: string) => Promise<{

stream: ReadableStream<any>,

pipe: string

}>>;

export const PipeAgents: PipeAgentsT = {

runPairProgrammer: runPairProgrammerAgent,

runDatabaseArchitect: runDatabaseArchitectAgent

};

// Placeholders: Steps #5 and #6 replace these with the full implementations.

async function runPairProgrammerAgent(prompt: string) {}

async function runDatabaseArchitectAgent(prompt: string) {}

Let's go through the above code.

- Import Langbase and getToolsFromRunStream from the Langbase SDK.

- Initialize the Langbase SDK with the API key.

- Define the runCodeGenerationAgent function that sends the prompt through the code-alchemist agent.

- Split the stream with tee() so that one copy can be read for tool calls while the other stays available to return.

- Inside runCodeGenerationAgent, get the tools from the stream using the getToolsFromRunStream function.

- Check if there are any tool calls in the stream.

- If there are tool calls, call the appropriate pipe agent. In this example, only one pipe agent will be called by the decision-making agent.

- If there are no tool calls, return the direct output from the code-alchemist pipe.
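The dispatch step above looks up an agent by the tool name the model returned. Since that name comes from model output, it can help to resolve it defensively. The sketch below shows one way to do this. The helper resolveAgent and the simplified types are hypothetical, and the tool-call shape is assumed only from the field the guide reads (toolCall.function.name).

```typescript
// Minimal shapes, assumed from the fields used in this guide.
type ToolCall = { function: { name: string } };
type AgentResult = { pipe: string; stream: unknown };
type Agent = (prompt: string) => Promise<AgentResult>;

// Hypothetical helper: return the agent for the first tool call, or null
// when there is no tool call or the tool name is not registered.
function resolveAgent(
	toolCalls: ToolCall[],
	agents: Record<string, Agent>
): Agent | null {
	const toolName = toolCalls[0]?.function.name;
	// hasOwnProperty avoids matching inherited keys such as "toString".
	if (!toolName || !Object.prototype.hasOwnProperty.call(agents, toolName)) {
		return null;
	}
	return agents[toolName] ?? null;
}

// Example registry with a stub agent standing in for a real pipe call.
const exampleAgents: Record<string, Agent> = {
	runPairProgrammer: async () => ({ pipe: 'react-copilot', stream: null })
};

const picked = resolveAgent(
	[{ function: { name: 'runPairProgrammer' } }],
	exampleAgents
);
```

A null result can then fall back to the code-alchemist output instead of failing the request.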

Step #5

The React Copilot is a code generation pipe agent. It takes the user prompt and generates a React component based on it. It also writes clear and concise comments and uses Tailwind CSS classes for styling.

You declared an empty runPairProgrammerAgent in the previous step. Now write its implementation by replacing that placeholder in the run-agents.ts file with the following code:

runPairProgrammerAgent function code

/**

* Executes a pair programmer agent with React-specific configuration.

*

* @param prompt - The user input prompt to be processed by the agent

* @returns {Promise<{stream: ReadableStream, pipe: string}>} An object containing:

* - stream: A ReadableStream of the agent's response

* - pipe: The name of the pipe being used ('react-copilot')

*

* @example

* const result = await runPairProgrammerAgent("Create a React button component");

* // Use the returned stream to process the agent's response

*/

async function runPairProgrammerAgent(prompt: string) {

const { stream } = await langbase.pipes.run({

stream: true,

name: 'react-copilot',

messages: [{ role: 'user', content: prompt }],

variables: {

framework: 'React',

}

});

return {

stream,

pipe: 'react-copilot'

};

}

Let's break down the above code:

- Call the react-copilot pipe agent with the user prompt and React as the framework variable.

- Return the stream and the pipe name, react-copilot.
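The variables object supplies values for placeholders in the pipe's prompt. The sketch below illustrates the idea, assuming the forked react-copilot pipe references the variable as {{framework}}. The pipe's actual prompt is not shown in this guide, and fillTemplate is a hypothetical illustration, not how Langbase implements substitution.

```typescript
// Illustrative only: replace {{name}} placeholders with values from a
// variables object, leaving unknown placeholders untouched.
function fillTemplate(
	template: string,
	variables: Record<string, string>
): string {
	return template.replace(
		/\{\{\s*(\w+)\s*\}\}/g,
		(match: string, name: string) => {
			const known = Object.prototype.hasOwnProperty.call(variables, name);
			return known ? (variables[name] ?? match) : match;
		}
	);
}

// The sentence below is a made-up prompt, not the real react-copilot prompt.
const filled = fillTemplate('You are an expert {{framework}} developer.', {
	framework: 'React'
});
// filled === 'You are an expert React developer.'
```

Because the framework is a variable rather than hard-coded text, the same pipe could in principle be reused with a different value.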

Step #6

The Database Architect pipe agent generates SQL. It takes the user prompt and generates either a single SQL query or an entire database schema. Where necessary, it also incorporates partitioning strategies and indexing options to optimize query performance.

You declared an empty runDatabaseArchitectAgent in Step #4. Now write its implementation by replacing that placeholder in the run-agents.ts file with the following code:

runDatabaseArchitectAgent function code

/**

* Executes the Database Architect Agent with the given prompt.

*

* @param prompt - The input prompt string to be processed by the database architect agent

* @returns An object containing:

* - stream: The output stream from the agent

* - pipe: The name of the pipe used ('database-architect')

* @async

*/

async function runDatabaseArchitectAgent(prompt: string) {

const {stream} = await langbase.pipes.run({

stream: true,

name: 'database-architect',

messages: [{ role: 'user', content: prompt }]

})

return {

stream,

pipe: 'database-architect'

};

}

Here is a quick explanation of what's happening in the code above:

- Call the database-architect pipe agent with the user prompt.

- Return the stream and the pipe name, database-architect.
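runPairProgrammerAgent and runDatabaseArchitectAgent differ only in the pipe name and the optional variables. As an optional refactor, the shared shape could be captured in a small factory. This is a sketch under stated assumptions: createPipeAgent, RunOptions, and RunFn are hypothetical names, and the run function is injected so the example does not depend on the SDK's exact types.

```typescript
// Subset of the run options used in this guide (assumed shape).
type RunOptions = {
	stream: true;
	name: string;
	messages: { role: 'user'; content: string }[];
	variables?: Record<string, string>;
};

type RunFn<S> = (options: RunOptions) => Promise<{ stream: S }>;

// Hypothetical factory: builds an agent function bound to one pipe.
function createPipeAgent<S>(
	run: RunFn<S>,
	name: string,
	variables?: Record<string, string>
) {
	return async (prompt: string): Promise<{ stream: S; pipe: string }> => {
		const { stream } = await run({
			stream: true,
			name,
			messages: [{ role: 'user', content: prompt }],
			// Only send variables when the pipe needs them.
			...(variables ? { variables } : {})
		});
		return { stream, pipe: name };
	};
}

// In run-agents.ts this might be wired up as follows, assuming the SDK's
// option types are compatible:
// const runDatabaseArchitectAgent = createPipeAgent(
// 	options => langbase.pipes.run(options),
// 	'database-architect'
// );
```

Injecting the run function also makes each agent testable with a stub instead of a live Langbase call.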

Step #7

Now that you have all the pipes in place, you can call the /api/generate endpoint to generate either a React component or a SQL query, depending on the user prompt.

You will write a custom React hook useLangbase that will call the /api/generate endpoint with the user prompt and handle the response stream.

use-langbase.ts hook

import { getRunner } from 'langbase';

import { FormEvent, useState } from 'react';

const useLangbase = () => {

const [loading, setLoading] = useState(false);

const [completion, setCompletion] = useState('');

const [hasFinishedRun, setHasFinishedRun] = useState(false);

/**

* Executes the Code Alchemist agent with the provided prompt and handles the response stream.

*

* @param {Object} params - The parameters for running the Code Alchemist agent

* @param {FormEvent<HTMLFormElement>} [params.e] - Optional form event to prevent default behavior

* @param {string} params.prompt - The prompt to send to the Code Alchemist agent

*

* @remarks API and network errors are logged to the console rather than thrown.

*

* @returns {Promise<void>}

*/

async function runCodeAlchemistAgent({

e,

prompt,

}: {

prompt: string;

e?: FormEvent<HTMLFormElement>;

}) {

e && e.preventDefault();

// if the prompt is empty, return

if (!prompt.trim()) {

console.info('Please enter a prompt first.');

return;

}

try {

setLoading(true);

setHasFinishedRun(false);

// make a POST request to the API endpoint to call AI pipes

const response = await fetch('/api/generate', {

method: 'POST',

headers: {

'Content-Type': 'application/json'

},

body: JSON.stringify({ prompt })

});

// if the response is not ok, log the error and stop

if (!response.ok) {

const error = await response.json();

console.error(error);

return;

}

// get the response body as a stream

if (response.body) {

const runner = getRunner(response.body);

runner.on('content', (content) => {

content && setCompletion(prev => prev + content);

});

}

} catch (error) {

console.error('Something went wrong. Please try again later.');

} finally {

setLoading(false);

setHasFinishedRun(true);

}

}

return {

loading,

completion,

hasFinishedRun,

runCodeAlchemistAgent

};

};

export default useLangbase;

Here's what you have done in this step:

- Create a custom React hook useLangbase that calls the /api/generate endpoint with the user prompt.

- Use the getRunner function from the Langbase SDK to read the response body as a stream.

- Listen for the content event on the runner and update the completion state with the generated content.
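The API route from Step #3 also returns the pipe name in a pipe response header, which the hook does not read. The sketch below shows one way the client could use it, for example to pick syntax highlighting for the streamed output. The helper name languageForPipe and the language labels are hypothetical.

```typescript
type OutputLanguage = 'tsx' | 'sql' | 'markdown';

// Hypothetical helper: map the pipe name from the response header to a
// display language, defaulting to markdown for the decision-maker pipe
// or a missing header.
function languageForPipe(pipe: string | null): OutputLanguage {
	switch (pipe) {
		case 'react-copilot':
			return 'tsx';
		case 'database-architect':
			return 'sql';
		default:
			return 'markdown';
	}
}

// Inside the hook, after the fetch call, this could be used as:
// const language = languageForPipe(response.headers.get('pipe'));
```

The function accepts null because headers.get returns null when the header is absent.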

You can try out the live demo of the CodeAlchemist here.

- Build something cool with Langbase APIs and SDK.

- Join our Discord community for feedback, requests, and support.