Integrations: Azure OpenAI

Learn how to use Azure OpenAI models in Langbase.

Azure OpenAI provides custom deployments of OpenAI models for enhanced security, regional compliance, and control. Langbase supports Azure OpenAI models: once you add your Azure OpenAI credentials, you can select these models when building a pipe.

Follow this step-by-step guide to use Azure OpenAI models in Langbase.

Step #1

If you have already set up and deployed an Azure OpenAI model, skip to Step 3.

Log in to Azure and create an Azure OpenAI resource.

Step #2

Once you have created an Azure OpenAI resource, deploy a model within it.

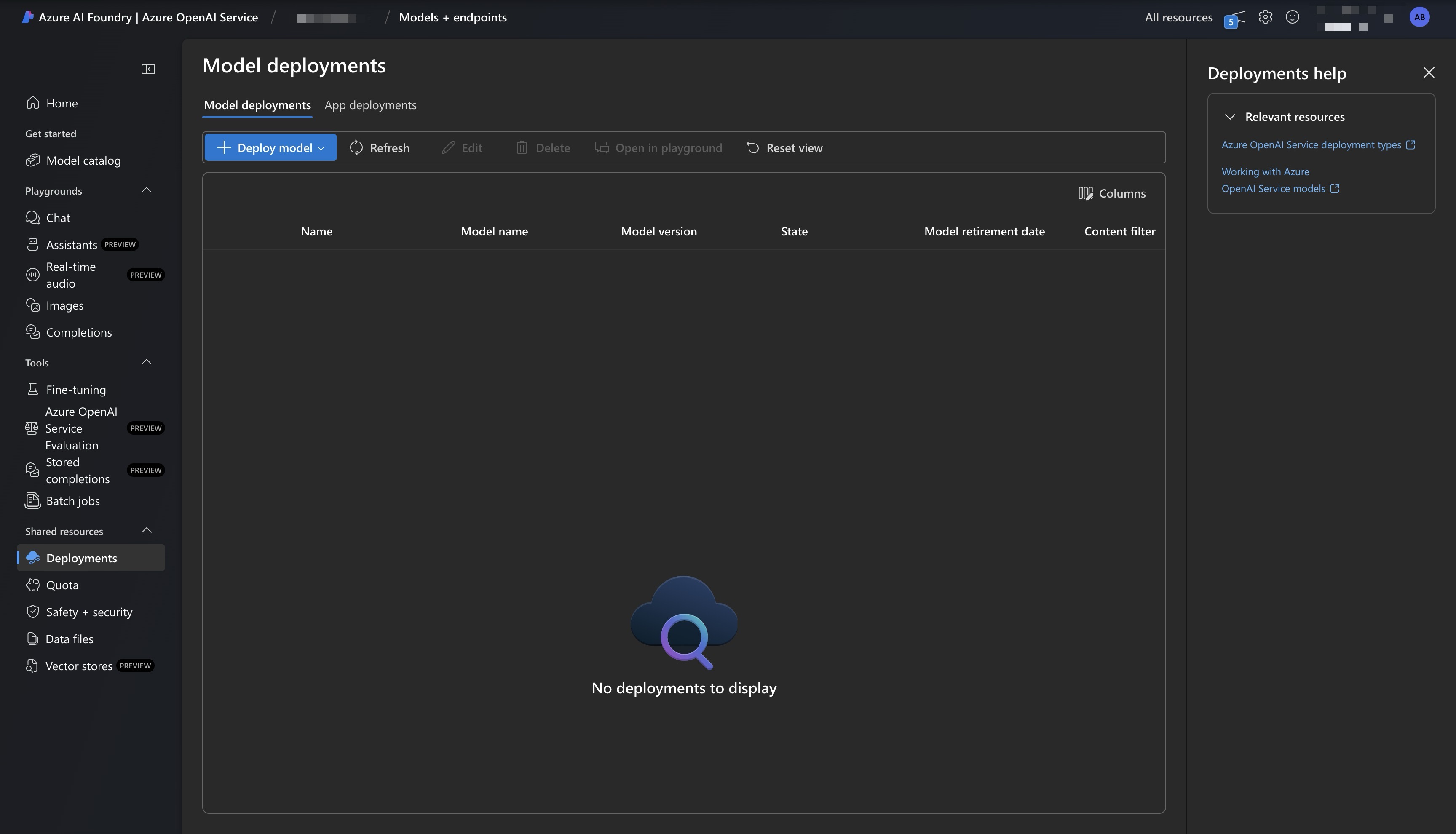

Go to your Azure OpenAI resource, navigate to Deployments, and click Deploy model.

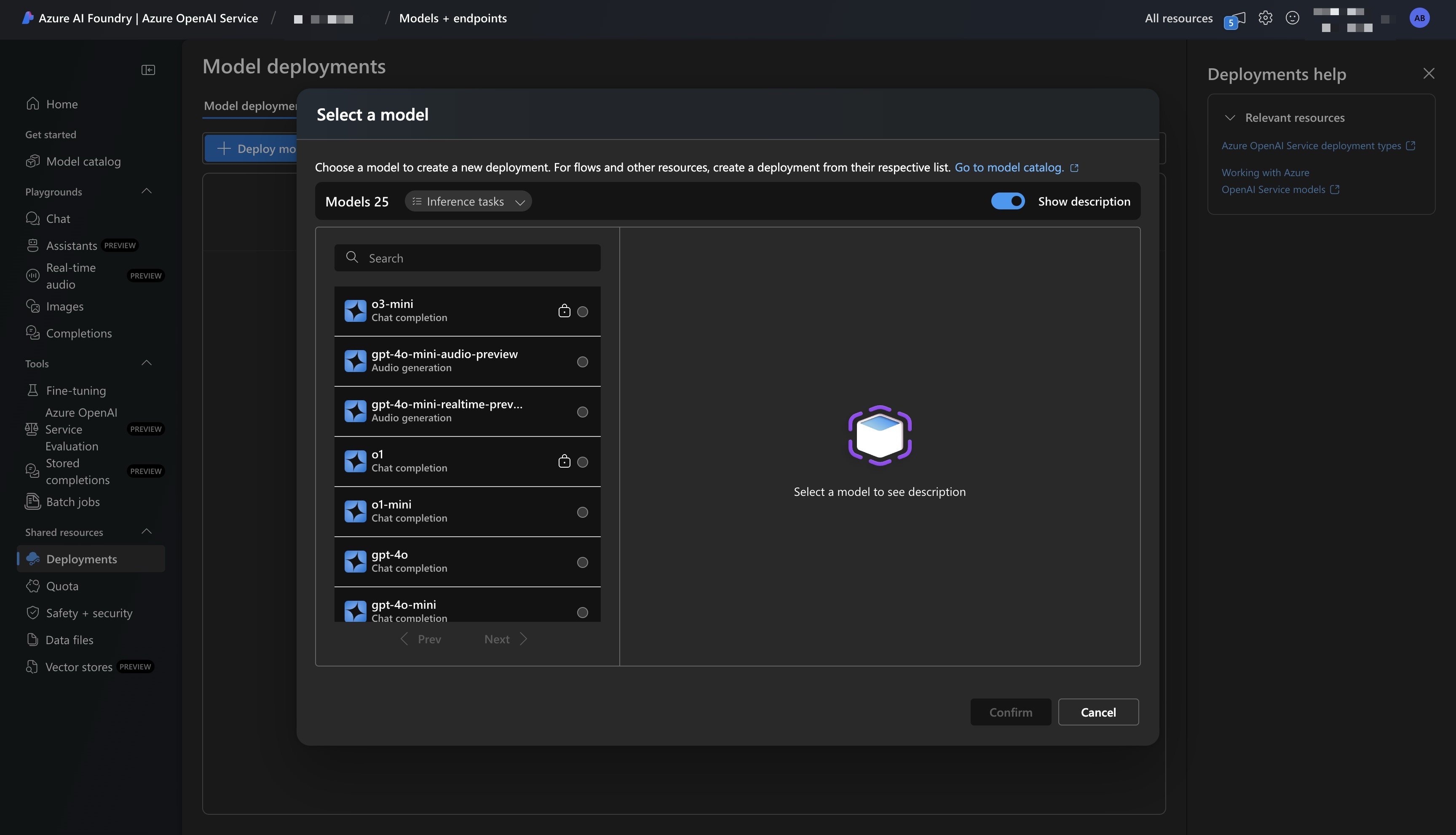

Select the model you want to deploy, follow the deployment instructions, and complete the process.

Step #3

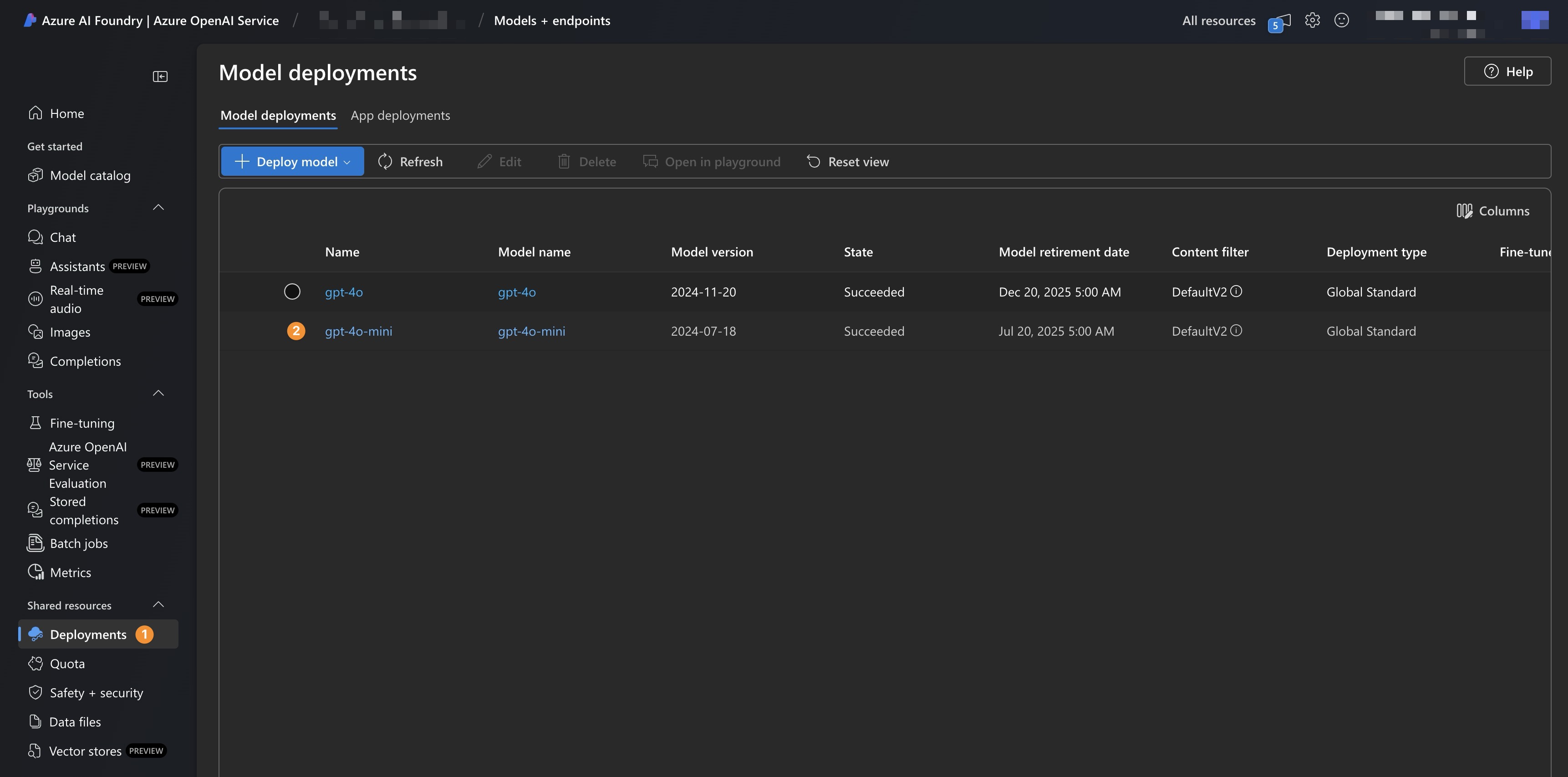

Go to ai.azure.com and navigate to Deployments. Click the deployed model you want to use in Langbase.

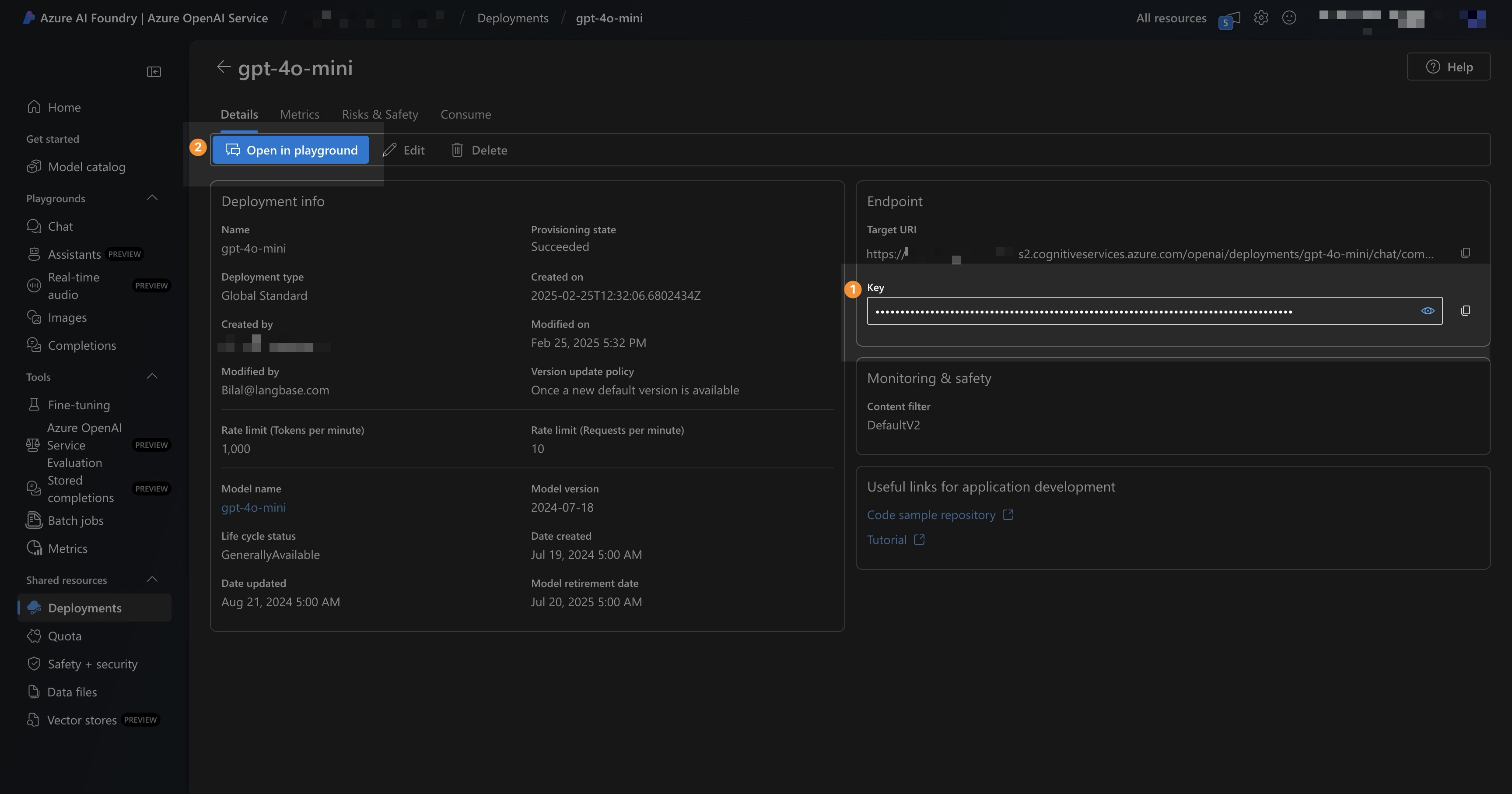

Inside the deployed model page, copy your Key, then click Open in playground.

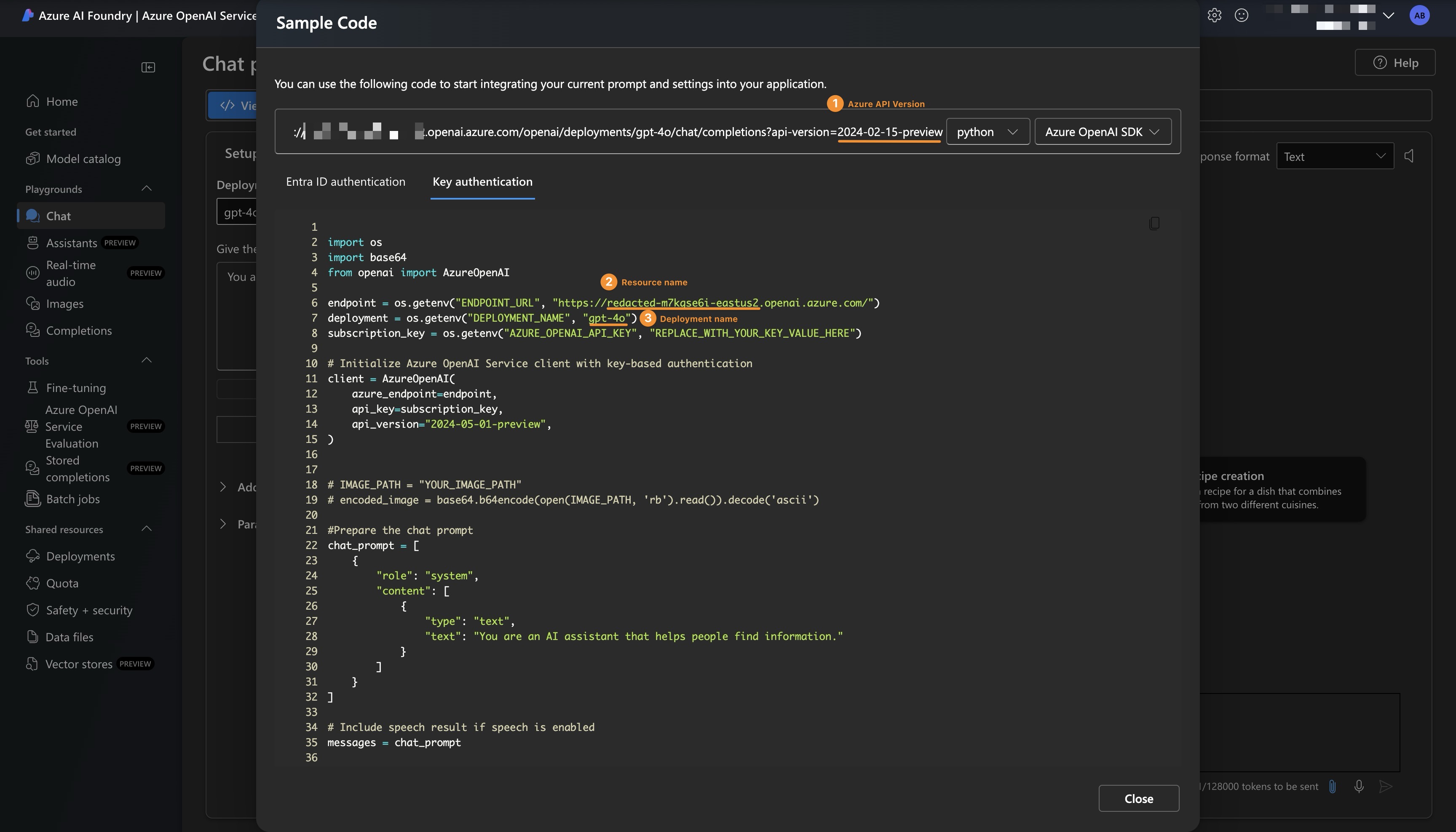

In the playground, click View Code, select Key Authentication, and copy the following three values shown in the screenshot:

- API version

- Resource name

- Deployment name
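To see how these three values fit together, here is a minimal sketch that assembles the chat completions URL for a deployment. It assumes the standard `https://<resource-name>.openai.azure.com` host; the endpoint shown in View Code for your resource may use a different host, in which case use that one. The function name and the sample values are illustrative placeholders, not part of Langbase or Azure.

```python
from urllib.parse import quote, urlencode


def azure_chat_completions_url(
    resource_name: str, deployment_name: str, api_version: str
) -> str:
    """Build the chat completions URL for an Azure OpenAI deployment.

    Assumes the default host pattern <resource-name>.openai.azure.com.
    """
    base = f"https://{resource_name}.openai.azure.com"
    # The deployment name is a path segment, so percent-encode it defensively.
    path = f"/openai/deployments/{quote(deployment_name, safe='')}/chat/completions"
    # The API version travels as the `api-version` query parameter.
    return f"{base}{path}?{urlencode({'api-version': api_version})}"


# Placeholder values for illustration only; substitute the ones you copied.
url = azure_chat_completions_url("my-resource", "gpt-4o-mini", "2024-02-01")
print(url)
```

Requests to this URL authenticate with the key you copied earlier, sent in the `api-key` header. Langbase asks for the three values separately, so you do not need to build this URL yourself; the sketch only shows which part of the endpoint each value corresponds to.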

Step #4

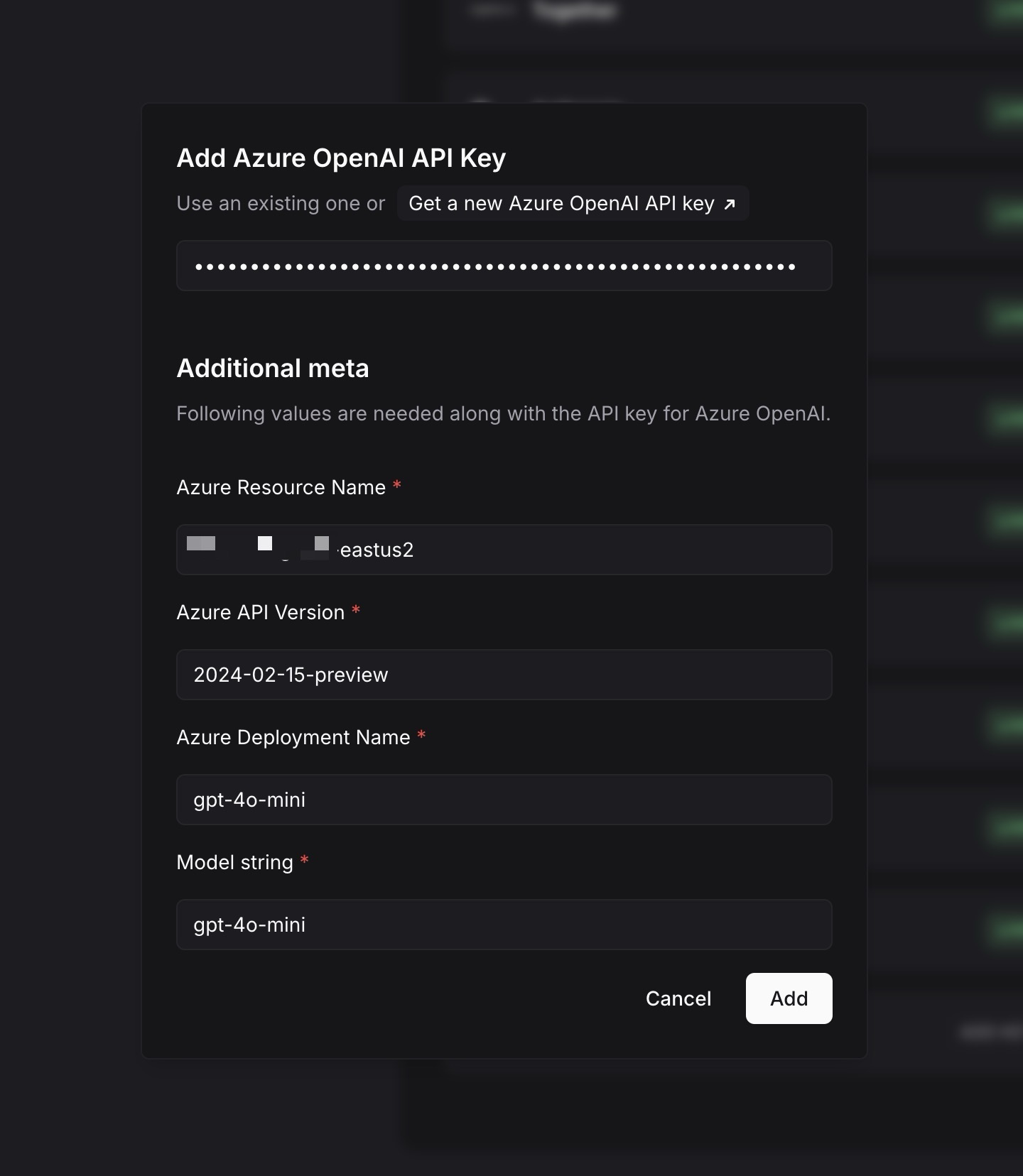

In Langbase Studio, navigate to Settings > LLM API Keys from the sidebar. Select Azure OpenAI, then enter the Azure OpenAI credentials you copied in Step 3:

- API Key: Your Azure OpenAI Key

- Resource Name: Your Azure OpenAI Resource Name

- API Version: Your Azure OpenAI API Version

- Deployment Name: Your Azure OpenAI Deployment Name

- Model Name: The OpenAI Model Name (often the same as Deployment Name)

Click Add to save the configuration. Your credentials will be encrypted and stored securely.
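Mis-copied values are easy to miss in a form like this. The sketch below is a hypothetical local check, not a Langbase feature: it mirrors the five fields in a small dataclass and flags empty values, stray whitespace, a full URL pasted where the bare resource name belongs, and an API version that does not match the date-based `YYYY-MM-DD` or `YYYY-MM-DD-preview` shape (an assumption; your API version may legitimately differ).

```python
from __future__ import annotations

import re
from dataclasses import dataclass, fields

# Assumed date-based shape, e.g. "2024-02-01" or "2024-05-01-preview".
API_VERSION_RE = re.compile(r"^\d{4}-\d{2}-\d{2}(-preview)?$")


@dataclass(frozen=True)
class AzureOpenAICredentials:
    """Hypothetical container mirroring the five form fields."""

    api_key: str
    resource_name: str
    api_version: str
    deployment_name: str
    model_name: str

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means none were found."""
        issues = []
        for f in fields(self):
            value = getattr(self, f.name)
            if not value.strip():
                issues.append(f"{f.name} is empty")
            elif value != value.strip():
                issues.append(f"{f.name} has leading or trailing whitespace")
        version = self.api_version.strip()
        if version and not API_VERSION_RE.match(version):
            issues.append("api_version does not look like YYYY-MM-DD[-preview]")
        # A dot or slash suggests the full endpoint was pasted instead of the name.
        if "." in self.resource_name or "/" in self.resource_name:
            issues.append("resource_name should be the bare name, not a URL")
        return issues


# Placeholder values; the resource name is deliberately wrong to show a finding.
creds = AzureOpenAICredentials(
    api_key="<your-key>",
    resource_name="https://my-resource.openai.azure.com",
    api_version="2024-02-01",
    deployment_name="gpt-4o-mini",
    model_name="gpt-4o-mini",
)
print(creds.problems())
```

Running a check like this before you click Add is optional. It cannot confirm that the key or deployment exists, only that the values have a plausible shape.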

You are all set to use Azure OpenAI models in Langbase. Go to Pipes, create a new pipe, and select an Azure OpenAI model to start using it.