How to use tool calling with Chat API?

Follow this quick guide to learn how to use tool calling with the Chat API in Langbase.

We will not use stream mode for this example.

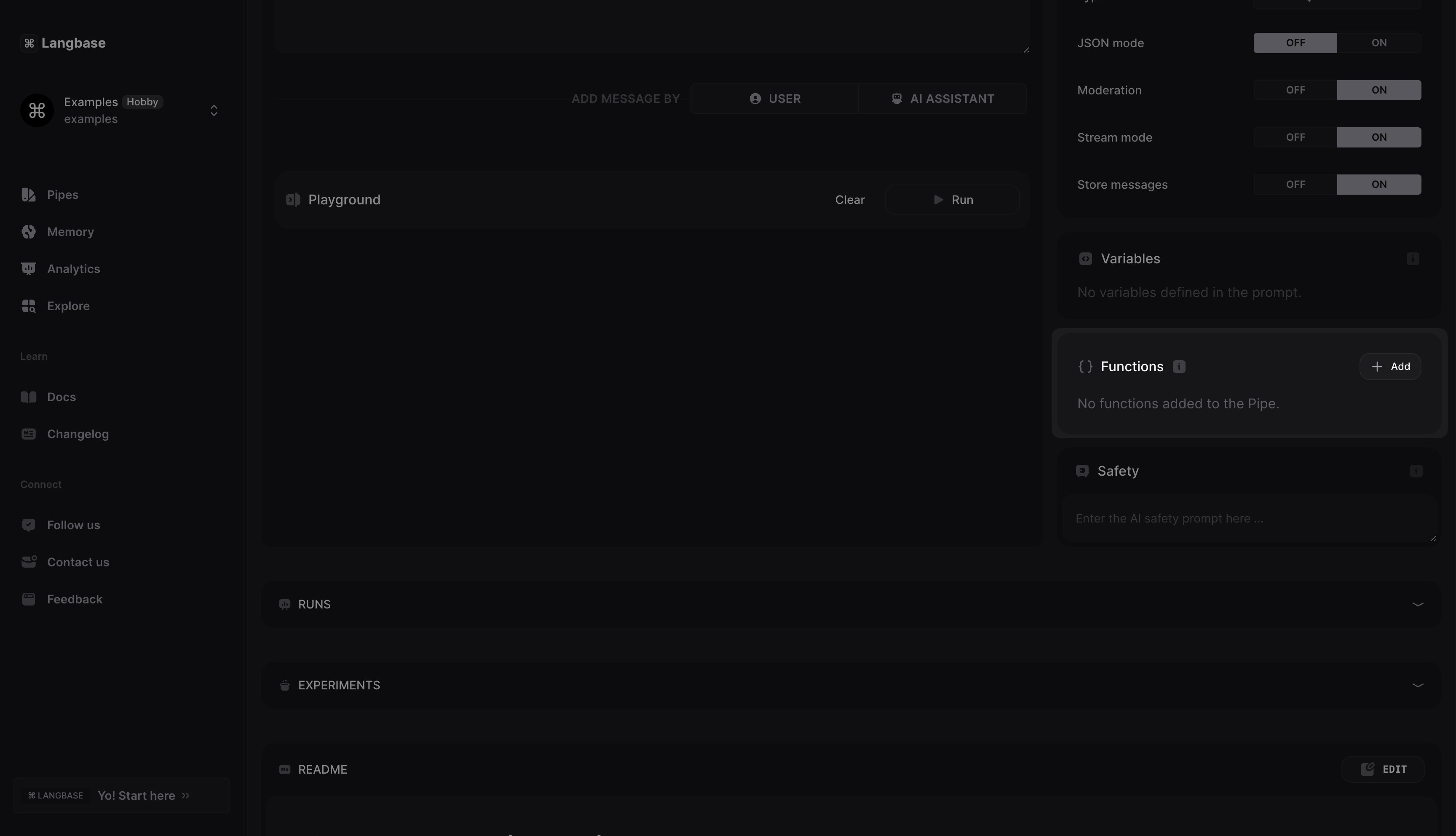

Step #0

Create a new chat type Pipe or open an existing chat Pipe in your Langbase account. Go ahead and turn off the stream mode for this example and deploy the Pipe.

Alternatively, you can fork this tool call chat Pipe and skip to Step #3.

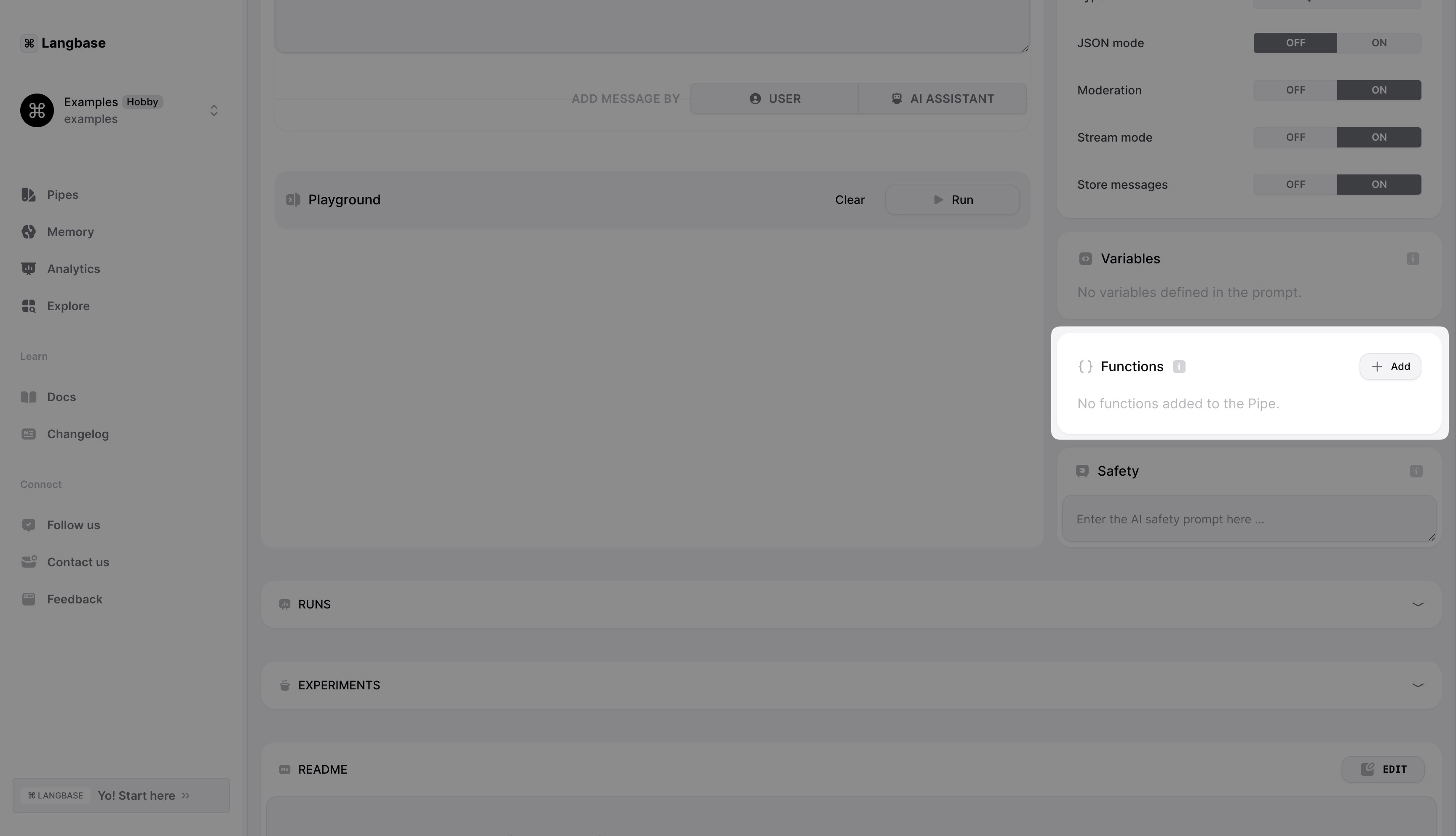

Step #1

Tool calling is available with OpenAI models, so select any of the available OpenAI models in your Pipe.

Step #2

Let's add a tool to get the current weather of a given location. Click on the Add button in the Tools section to add a new tool.

This will open a modal where you can define the tool. The tool we are defining will take two arguments:

- location
- unit

The location argument is required and the unit argument is optional.

The tool definition will look something like the following.

{
  "type": "function",
  "function": {
    "name": "get_current_weather",
    "description": "Get the current weather of a given location",
    "parameters": {
      "type": "object",
      "required": [
        "location"
      ],
      "properties": {
        "unit": {
          "enum": [
            "celsius",
            "fahrenheit"
          ],
          "type": "string"
        },
        "location": {
          "type": "string",
          "description": "The city and state, e.g. San Francisco, CA"
        }
      }
    }
  }
}

Go ahead and deploy the Pipe to production.
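
The definition above marks `location` as required and restricts `unit` to two values. Since the model produces the arguments, it can be useful to check them on your side before running the tool. The following is a minimal sketch of such a check, not part of Langbase itself: `validateToolArgs` is a hypothetical helper that only looks at `required`, string types, and `enum`, and it is not a full JSON Schema validator.

```javascript
// The tool definition from this step, as a JavaScript object.
const weatherTool = {
  type: 'function',
  function: {
    name: 'get_current_weather',
    description: 'Get the current weather of a given location',
    parameters: {
      type: 'object',
      required: ['location'],
      properties: {
        unit: { enum: ['celsius', 'fahrenheit'], type: 'string' },
        location: {
          type: 'string',
          description: 'The city and state, e.g. San Francisco, CA'
        }
      }
    }
  }
};

// Hypothetical helper: returns a list of problems found in `args`.
// An empty list means the arguments satisfy the checks performed here.
function validateToolArgs(tool, args) {
  const { required = [], properties = {} } = tool.function.parameters;
  const errors = [];

  // Every required argument must be present.
  for (const key of required) {
    if (args[key] === undefined) {
      errors.push(`missing required argument: ${key}`);
    }
  }

  // Every supplied argument must be declared and match its type and enum.
  for (const [key, value] of Object.entries(args)) {
    const spec = properties[key];
    if (!spec) {
      errors.push(`unknown argument: ${key}`);
      continue;
    }
    if (spec.type === 'string' && typeof value !== 'string') {
      errors.push(`${key} must be a string`);
    }
    if (spec.enum && !spec.enum.includes(value)) {
      errors.push(`${key} must be one of: ${spec.enum.join(', ')}`);
    }
  }
  return errors;
}

console.log(validateToolArgs(weatherTool, { location: 'San Francisco, CA' }));
console.log(validateToolArgs(weatherTool, { unit: 'kelvin' }));
```

The first call reports no problems; the second reports the missing `location` and the unsupported `unit`.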

Step #3

Copy your Pipe API key from the Pipe API page. You will need this key to call the Chat API.

Now let's create an index.js file where we will define the get_current_weather function and call the Pipe.

const get_current_weather = ({ location, unit }) => {
  // get weather for the location and return the temperature
};

const tools = {
  get_current_weather
};

(async () => {
  const messages = [
    {
      role: 'user',
      content: 'Whats the weather in SF?'
    }
  ];

  // replace this with your Pipe API key
  const pipeApiKey = ``;

  const res = await fetch('https://api.langbase.com/beta/chat', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${pipeApiKey}`
    },
    body: JSON.stringify({
      messages
    })
  });
})();
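
The body of `get_current_weather` is left empty in the snippet above. As an illustration only, here is one way it could be filled in while you test the flow. The `SAMPLE_CELSIUS` table and its readings are made up for this sketch; a real implementation would call a weather service of your choice instead.

```javascript
// Hypothetical sample data: these temperatures are invented for the example.
const SAMPLE_CELSIUS = {
  'san francisco': 18,
  'new york': 24
};

const get_current_weather = ({ location, unit = 'celsius' }) => {
  // Normalize "San Francisco, CA" to "san francisco" for the lookup.
  const city = location.split(',')[0].trim().toLowerCase();
  const celsius = SAMPLE_CELSIUS[city];

  if (celsius === undefined) {
    // Return an error object so the model can tell the user what went wrong.
    return { location, error: 'No weather data for this location' };
  }

  // Convert only when the caller explicitly asked for Fahrenheit.
  const temperature =
    unit === 'fahrenheit' ? Math.round((celsius * 9) / 5 + 32) : celsius;

  return { location, temperature, unit };
};

const tools = { get_current_weather };

console.log(tools.get_current_weather({ location: 'San Francisco, CA' }));
```

Returning a plain object keeps the result easy to serialize with `JSON.stringify` when it is sent back as a tool message.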

Because the user prompt requires the current weather of San Francisco, the model will respond with a tool call like the following:

{
  "role": "assistant",
  "content": null,
  "tool_calls": [
    {
      "id": "call_u28sPmmCAWkop0OdgDYDJ9OG",
      "type": "function",
      "function": {
        "name": "get_current_weather",
        "arguments": "{\"location\": \"San Francisco\"}"
      }
    }
  ]
}
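
Note that `arguments` in the message above is a JSON-encoded string rather than an object, so it has to be parsed before use. A small sketch of that step follows; `parseToolCalls` is a hypothetical helper name, and treating unparseable arguments as `null` is an assumption made for this example.

```javascript
// Hypothetical helper: extracts tool calls from an assistant message and
// decodes each call's JSON-encoded arguments string.
function parseToolCalls(message) {
  const toolCalls = message.tool_calls ?? [];

  return toolCalls.map(call => {
    let args;
    try {
      args = JSON.parse(call.function.arguments);
    } catch {
      // The arguments string was not valid JSON.
      args = null;
    }
    return { id: call.id, name: call.function.name, args };
  });
}

// The assistant message shown above.
const message = {
  role: 'assistant',
  content: null,
  tool_calls: [
    {
      id: 'call_u28sPmmCAWkop0OdgDYDJ9OG',
      type: 'function',
      function: {
        name: 'get_current_weather',
        arguments: '{"location": "San Francisco"}'
      }
    }
  ]
};

console.log(parseToolCalls(message));
```

A message without a `tool_calls` array yields an empty list, which makes the "did the model call a tool?" check a simple length test.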

Step #4

To check if the model has called the tool, you can check the tool_calls array in the model's response. If it exists, call the functions specified in the tool_calls array and send the response back to Langbase.

// `tools` is the map of tool functions defined in Step #3
(async () => {
  const messages = [
    {
      role: 'user',
      content: 'Whats the weather in SF?',
    },
  ];

  // replace this with your Pipe API key
  const pipeApiKey = ``;

  const res = await fetch('https://api.langbase.com/beta/chat', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Bearer ${pipeApiKey}`,
    },
    body: JSON.stringify({
      messages,
    }),
  });

  const data = await res.json();

  // get the threadId from the response headers
  const threadId = res.headers.get('lb-thread-id');

  const { raw } = data;

  // get the response message from the model
  const responseMessage = raw.choices[0].message;

  // get the tool calls from the response message
  const toolCalls = responseMessage.tool_calls;

  if (toolCalls) {
    const toolMessages = [];

    // call all the functions in the tool_calls array
    toolCalls.forEach(toolCall => {
      const toolName = toolCall.function.name;
      const toolParameters = JSON.parse(toolCall.function.arguments);
      const toolFunction = tools[toolName];
      const toolResponse = toolFunction(toolParameters);

      toolMessages.push({
        tool_call_id: toolCall.id, // required: id of the tool call
        role: 'tool', // required: role of the message
        name: toolName, // required: name of the tool
        content: JSON.stringify(toolResponse), // required: response of the tool
      });
    });

    // send the tool responses back to the API on the same thread
    const finalRes = await fetch('https://api.langbase.com/beta/chat', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${pipeApiKey}`,
      },
      body: JSON.stringify({
        messages: toolMessages,
        threadId,
      }),
    });

    // the final response contains the model's answer based on the tool output
    const finalData = await finalRes.json();
    console.log(finalData.completion);
  }
})();
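
The loop above assumes every requested tool exists and runs synchronously. If your tools make network requests, or the model names a tool you have not defined, a slightly more defensive variant may help. The sketch below is one possible shape, not the only one: `runToolCalls` is a hypothetical helper, and reporting failures back to the model as `{ error: ... }` is an assumption of this example. The message fields match the ones used above.

```javascript
// Hypothetical helper: runs every requested tool (sync or async) and builds
// the `tool` messages to send back to the Chat API.
async function runToolCalls(toolCalls, tools) {
  return Promise.all(
    toolCalls.map(async toolCall => {
      const toolName = toolCall.function.name;
      const toolFunction = tools[toolName];
      let result;

      try {
        if (typeof toolFunction !== 'function') {
          throw new Error(`Unknown tool: ${toolName}`);
        }
        // `await` works for both plain return values and promises.
        result = await toolFunction(JSON.parse(toolCall.function.arguments));
      } catch (error) {
        // Report the failure to the model instead of crashing the script.
        result = { error: error.message };
      }

      return {
        tool_call_id: toolCall.id,
        role: 'tool',
        name: toolName,
        content: JSON.stringify(result),
      };
    })
  );
}

// Usage with a stubbed async tool (the temperature is a made-up value).
const exampleTools = {
  get_current_weather: async ({ location }) => ({ location, temperature: 25 }),
};

const exampleCalls = [
  {
    id: 'call_u28sPmmCAWkop0OdgDYDJ9OG',
    type: 'function',
    function: {
      name: 'get_current_weather',
      arguments: '{"location": "San Francisco"}',
    },
  },
];

runToolCalls(exampleCalls, exampleTools).then(toolMessages => {
  console.log(toolMessages);
});
```

The returned array can be passed as `messages` together with `threadId`, exactly as in the second `fetch` call above.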

This is what a typical model response will look like after calling the tool:

{
  "completion": "The current temperature in San Francisco, CA is 25°C.",
  "raw": {
    "id": "chatcmpl-9hQG8k2pD1A6JoFKQ0O6BKKvJzogS",
    "object": "chat.completion",
    "created": 1720136072,
    "model": "gpt-4o-2024-05-13",
    "choices": [
      {
        "index": 0,
        "message": {
          "role": "assistant",
          "content": "The current temperature in San Francisco, CA is 25°C."
        },
        "logprobs": null,
        "finish_reason": "stop"
      }
    ],
    "usage": {
      "prompt_tokens": 121,
      "completion_tokens": 14,
      "total_tokens": 135
    },
    "system_fingerprint": "fp_ce0793330f"
  }
}
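
To show how the fields in this response might be read in code, here is a brief sketch. `summarizeResponse` is a hypothetical helper, and it assumes the response has the shape shown above (a top-level `completion` plus the `raw` model output).

```javascript
// Hypothetical helper: picks the commonly needed fields out of a response.
function summarizeResponse(data) {
  const choice = data.raw.choices[0];
  const toolCalls = choice.message.tool_calls;

  return {
    text: data.completion,
    finishReason: choice.finish_reason,
    // True when the model is asking for a tool to be run.
    needsToolCall: Array.isArray(toolCalls) && toolCalls.length > 0,
    totalTokens: data.raw.usage.total_tokens,
  };
}

// A trimmed copy of the response shown above.
const sampleResponse = {
  completion: 'The current temperature in San Francisco, CA is 25°C.',
  raw: {
    choices: [
      {
        index: 0,
        message: {
          role: 'assistant',
          content: 'The current temperature in San Francisco, CA is 25°C.',
        },
        logprobs: null,
        finish_reason: 'stop',
      },
    ],
    usage: { prompt_tokens: 121, completion_tokens: 14, total_tokens: 135 },
  },
};

console.log(summarizeResponse(sampleResponse));
```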

And that's it! You have successfully used tool calling with the Chat API in Langbase.